I love data. I also love JavaScript. Yet data and client-side JavaScript are often considered mutually exclusive. The industry typically sees data processing and aggregation as a back-end function, while JavaScript is just for displaying the pre-aggregated data. Bandwidth and processing time are seen as huge bottlenecks for dealing with data on the client side. And, for the most part, I agree. But there are situations where processing data in the browser makes perfect sense. In those use cases, how can we be successful?


Think about the data

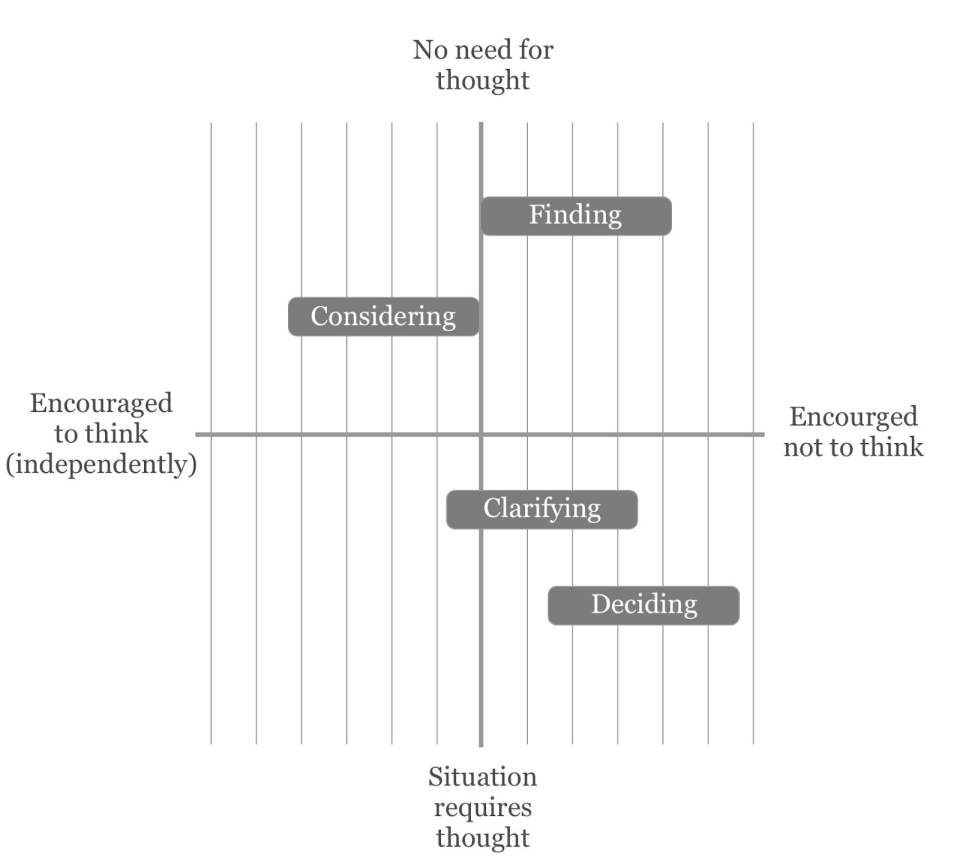

Working with data in JavaScript requires both complete data and an understanding of the tools available, so that you don’t have to make unnecessary server calls. It helps to draw a distinction between trilateral data and summarized data.

Trilateral data consists of raw, transactional data. This is the low-level detail that, by itself, is almost impossible to analyze. On the other side of the spectrum you have your summarized data. This is the data that can be presented in a meaningful and thoughtful way. We’ll call this our composed data. Most important to developers are the data structures that reside between our transactional details and our fully composed data. This is our “sweet spot.” These datasets are aggregated but contain more than what we need for the final presentation. They are multidimensional in that they have two or more different dimensions (and multiple measures) that provide flexibility in how the data can be presented. These datasets allow your end users to shape the data and extract information for further analysis. They are small and performant, but offer enough detail to allow for insights that you, as the author, may not have anticipated.

Getting your data into perfect form so that you can avoid any and all manipulation on the front end doesn’t need to be the goal. Instead, get the data reduced to a multidimensional dataset. Define several key dimensions (e.g., people, products, places, and time) and measures (e.g., sum, count, average, minimum, and maximum) that your client would be interested in. Finally, present the data on the page with form elements that can slice the data in a way that allows for deeper analysis.
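As a rough illustration of the measures listed above, the sketch below computes sum, count, average, minimum, and maximum for one slice of data in a single `reduce()` pass. The helper name `summarizeMeasures` is hypothetical, and it assumes rows shaped like the multidimensional sample used later in this article (a numeric `total` per year and state).

```javascript
// Hypothetical helper: compute common measures over the "total" field of a
// slice of multidimensional rows in a single reduce() pass.
function summarizeMeasures(rows) {
  const acc = rows.reduce(
    (m, row) => ({
      sum: m.sum + row.total,
      count: m.count + 1,
      min: Math.min(m.min, row.total),
      max: Math.max(m.max, row.total),
    }),
    { sum: 0, count: 0, min: Infinity, max: -Infinity }
  );
  // Derive the average from sum and count instead of storing it;
  // an empty slice has no meaningful average.
  return { ...acc, average: acc.count > 0 ? acc.sum / acc.count : null };
}

// Two Alabama rows from the sample dataset (2005 and 2006).
const slice = [
  { year: 2005, state: "Alabama", total: 1386 },
  { year: 2006, state: "Alabama", total: 989 },
];
console.log(summarizeMeasures(slice));
// sum 2375, count 2, min 989, max 1386, average 1187.5
```

Note that for an empty slice `min` and `max` stay at `Infinity` and `-Infinity`; a real UI would probably display those as “N/A.”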

Creating datasets is a delicate balance. You’ll want enough data to make your analytics meaningful without putting too much stress on the client machine. This means coming up with clear, concise requirements. Depending on how large your dataset is, you might need to include many different dimensions and metrics. A few things to keep in mind:

- Is the content you are selecting an edge case or something that will be used frequently? Go with the 80/20 rule: 80% of users typically need 20% of what’s available.

- Is each dimension finite? Dimensions should always have a predetermined set of values. For example, an ever-growing product inventory might be too overwhelming, whereas product categories might work well.

- When possible, aggregate the data, especially dates. If you can get away with aggregating by year, do it. If you need to go down to quarters or months, you can, but avoid anything more granular.

- Less is more. A dimension with fewer values is better for performance. For instance, take a dataset with 200 rows. If you add another dimension that has four possible values, the most it can grow to is 200 * 4 = 800 rows. If you add a dimension that has 50 values, it can grow to 200 * 50 = 10,000 rows. This growth compounds with each dimension you add.

- In multidimensional datasets, avoid summarizing measures that need to be recalculated every time the dataset changes. For instance, if you plan to show averages, include the total and the count, and calculate the averages dynamically. This way, when you summarize the data further, you can recalculate averages from the summarized values.
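Two of the points above, compounding row growth and storing totals and counts instead of averages, can be checked with a few lines of code. This is a minimal sketch: `maxRows` is a hypothetical helper that computes only the worst case, and the `rows` data is made up purely for illustration.

```javascript
// Hypothetical helper: worst-case row count after adding dimensions.
// Each added dimension can multiply the row count by at most its number
// of distinct values; real growth is lower when combinations are absent.
function maxRows(baseRows, ...cardinalities) {
  return cardinalities.reduce((rows, distinct) => rows * distinct, baseRows);
}

console.log(maxRows(200, 4));     // 800
console.log(maxRows(200, 50));    // 10000
console.log(maxRows(200, 4, 50)); // 40000 (growth compounds)

// Hypothetical rows that store additive measures (total and count)
// instead of a precomputed average.
const rows = [
  { group: "A", total: 300, count: 3 }, // average 100
  { group: "B", total: 100, count: 4 }, // average 25
];

// Summarize by adding totals and counts, then divide once.
const rolled = rows.reduce(
  (acc, r) => ({ total: acc.total + r.total, count: acc.count + r.count }),
  { total: 0, count: 0 }
);
const average = rolled.total / rolled.count; // 400 / 7, about 57.14

// Averaging the stored averages ignores how many records each row
// represents, so it gives a different (wrong) answer.
const averageOfAverages =
  rows.reduce((sum, r) => sum + r.total / r.count, 0) / rows.length; // 62.5
```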

Make sure you understand the data you’re working with before attempting any of the above. You could make some incorrect assumptions that lead to misinformed decisions. Data quality is always a top priority. This applies to the data you are both querying and producing.

Never take a dataset and make assumptions about a dimension or a measure. Don’t be afraid to ask for data dictionaries or other documentation about the data to help you understand what you are looking at. Data analysis is not something that you guess at. There could be business rules applied, or data could be filtered out beforehand. If you don’t have this information in front of you, you can easily end up composing datasets and visualizations that are meaningless or, even worse, completely misleading.

The following code example will help explain this further. The full code for this example can be found on GitHub.

For our example we will use BuzzFeed’s dataset from “Where U.S. Refugees Come From—and Go—in Charts.” We’ll build a small app that shows the number of refugees arriving in a particular state in a particular year. Specifically, we will show one of the following, depending on the user’s request:

- total arrivals for a state in a given year;

- total arrivals for all years for a given state;

- and total arrivals for all states in a given year.

The UI for selecting your state and year can be a simple form:

The code will:

1. Send a request for the data.

2. Convert the results to JSON.

3. Process the data.

4. Log any errors to the console. (Note: To ensure that step 3 doesn’t execute until after the entire dataset is retrieved, we use the then() method and do all of our data processing within that block.)

5. Display the results back to the user.

We don’t want to pass excessively large datasets over the wire to browsers, for two main reasons: bandwidth and CPU. Instead, we’ll aggregate the data on the server with Node.js.

Source data:

```json
[{"year":2005,"origin":"Afghanistan","dest_state":"Alabama","dest_city":"Mobile","arrivals":0},
 {"year":2006,"origin":"Afghanistan","dest_state":"Alabama","dest_city":"Mobile","arrivals":0},
 ... ]
```

Multidimensional data:

```json
[{"year": 2005, "state": "Alabama", "total": 1386},
 {"year": 2005, "state": "Alaska", "total": 989},
 ... ]
```

How to get your data structure into place

AJAX and the Fetch API

There are a number of ways to retrieve data from an external source with JavaScript. Historically you would use an XHR (XMLHttpRequest) request. XHR is widely supported but is also fairly complex and requires several different methods. There are also libraries like Axios or jQuery’s AJAX API. These can help reduce complexity and provide cross-browser support, and they might be an option if you are already using those libraries, but we want to opt for native solutions whenever possible. Lastly, there is the more recent Fetch API. It is less widely supported, but it is straightforward and chainable. If you are using a transpiler (e.g., Babel), it will convert your syntax to a more widely supported equivalent; browsers that lack fetch() itself will still need a polyfill.

For our use case, we’ll use the Fetch API to pull the data into our application:

```javascript
window.fetchData = window.fetchData || {};

fetch('./data/aggregate.json')
  .then(response => {
    // fetch() only rejects on network errors, so treat HTTP errors
    // (404, 500, ...) as failures explicitly
    if (!response.ok) {
      throw new Error(`HTTP ${response.status}`);
    }
    // when the fetch executes we will convert the response
    // to JSON format and pass it to .then()
    return response.json();
  })
  .then(jsonData => {
    // take the resulting dataset and assign it to a global object
    window.fetchData.jsonData = jsonData;
  })
  .catch(err => {
    console.log("Fetch process failed", err);
  });
```

This code is a snippet from main.js in the GitHub repo.

The fetch() method sends a request for the data, and we convert the results to JSON. To ensure that the next statement doesn’t execute until after the entire dataset is retrieved, we use the then() method and do all our data processing within that block. Lastly, we console.log() any errors.
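For comparison, here is a sketch of the same load step written with async/await, which some readers find easier to follow than chained then() calls. The function name `loadData` and its optional `fetchImpl` parameter are assumptions for this example (the parameter exists only so the function can be exercised with a stub); by default it uses the global fetch() available in browsers and in Node.js 18 and later.

```javascript
// Hypothetical async/await version of the fetch chain in main.js.
async function loadData(url, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url);
  // fetch() rejects only on network failure; HTTP errors must be checked.
  if (!response.ok) {
    throw new Error(`Request for ${url} failed with status ${response.status}`);
  }
  // Execution pauses here until the whole body has been parsed as JSON.
  return response.json();
}
```

In the browser, `loadData("./data/aggregate.json").then(jsonData => { window.fetchData.jsonData = jsonData; }).catch(err => console.log("Fetch process failed", err));` would behave like the promise chain above, with HTTP errors also routed to the catch handler.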

Our goal here is to identify the key dimensions we need for reporting (year and state) before we aggregate the number of arrivals for those dimensions, removing country of origin and destination city. You can refer to the Node.js script /preprocess/index.js in the GitHub repo for more details on how we accomplished this. It generates the aggregate.json file loaded by fetch() above.
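The roll-up described above can be sketched in a few lines of Node.js. This is an illustration under stated assumptions, not the repo’s /preprocess/index.js: it assumes source rows shaped like the BuzzFeed records shown earlier, and the function name `aggregateArrivals` is hypothetical.

```javascript
// Hypothetical sketch of a server-side roll-up (not the repo's script).
// Assumes source rows shaped like:
// { year, origin, dest_state, dest_city, arrivals }
function aggregateArrivals(sourceRows) {
  const totals = new Map();
  for (const { year, dest_state: state, arrivals } of sourceRows) {
    // Keep only the reporting dimensions (year, state); origin and
    // dest_city disappear because we sum across them.
    const key = `${year}|${state}`;
    if (!totals.has(key)) {
      totals.set(key, { year, state, total: 0 });
    }
    totals.get(key).total += arrivals;
  }
  // A Map preserves insertion order, so rows come out in the order
  // each year/state pair first appeared.
  return Array.from(totals.values());
}

// In Node.js the result could then be written out for the browser, e.g.
// (output path is an assumption):
// require("fs").writeFileSync("data/aggregate.json",
//   JSON.stringify(aggregateArrivals(sourceRows)));
```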

Multidimensional data

The goal of multidimensional formatting is flexibility: data detailed enough that the user doesn’t need to send a query back to the server every time they want to answer a different question, but summarized enough that your application isn’t churning through the entire dataset with every new slice of data. You need to anticipate the questions and provide data that formulates the answers. Clients want to be able to do some analysis without feeling constrained or completely overwhelmed.

As with most APIs, we’ll be working with JSON data. JSON is a standard format that most APIs use to send data to applications as objects consisting of name and value pairs. Before we get back to our use case, let’s look at a sample multidimensional dataset:

```javascript
const ds = [{
  "year": 2005,
  "state": "Alabama",
  "total": 1386,
  "priorYear": 1201
}, {
  "year": 2005,
  "state": "Alaska",
  "total": 989,
  "priorYear": 1541
}, {
  "year": 2006,
  "state": "Alabama",
  "total": 989,
  "priorYear": 1386
}];
```

With your dataset properly aggregated, we can use JavaScript to analyze it further. Let’s take a look at some of JavaScript’s native array methods for composing data.

How to work effectively with your data via JavaScript

Array.filter()

The filter() method of the Array prototype (Array.prototype.filter()) takes a function that tests every item in the array, returning a new array containing only the values that passed the test. It allows you to create meaningful subsets of the data based on select dropdown or text filters. Provided you included meaningful, discrete dimensions in your multidimensional dataset, your user will be able to gain insight by viewing individual slices of data.

```javascript
ds.filter(d => d.state === "Alabama");

// Result
[{
  state: "Alabama",
  total: 1386,
  year: 2005,
  priorYear: 1201
}, {
  state: "Alabama",
  total: 989,
  year: 2006,
  priorYear: 1386
}]
```

Array.map()

The map() method of the Array prototype (Array.prototype.map()) takes a function and runs every array item through it, returning a new array with the same number of elements. Mapping data gives you the ability to create related datasets. One use case for this is to map ambiguous data to more meaningful, descriptive data. Another is to take metrics and perform calculations on them to allow for more in-depth analysis.

Use case #1, mapping data to more meaningful data:

```javascript
// Alaska is on the continent but not contiguous with the other states
ds.map(d => d.state === "Alaska" ? "Continental US" : "Contiguous US");

// Result
[
  "Contiguous US",
  "Continental US",
  "Contiguous US"
]
```

Use case #2, mapping data to calculated results:

```javascript
ds.map(d => Math.round(((d.priorYear - d.total) / d.total) * 100));

// Result
[-13, 56, 40]
```

Array.reduce()

The reduce() method of the Array prototype (Array.prototype.reduce()) takes a function and runs every array item through it, returning a single aggregated result. It’s most commonly used to do math, like adding or multiplying every number in an array, although it can also be used to concatenate strings or do many other things. I have always found this one tricky; it’s best learned through example.

When presenting data, you want to make sure it’s summarized in a way that gives insight to your users. Even though you may have done some general-level summarizing of the data server-side, this is where you allow for further aggregation based on the specific needs of the consumer. For our app we want to add up the total for every entry and show the aggregated result. We’ll do this by using reduce() to iterate through every record and add the current value to the accumulator. The final result will be the sum of all values (total) in the array.

```javascript
ds.reduce((accumulator, currentValue) =>
  accumulator + currentValue.total, 0);

// Result
3364
```

Applying these functions to our use case

Once we have our data, we will assign an event handler to the “Get the Data” button that will present the appropriate subset of our data. Remember that we have several hundred items in our JSON data. The code for binding data via our button is in our main.js:

```javascript
document.getElementById("submitBtn").onclick =
  function(e) {
    e.preventDefault();
    let state = document.getElementById("stateInput").value || "All";
    let year = document.getElementById("yearInput").value || "All";
    let subset = window.fetchData.filterData(year, state);
    if (subset.length === 0)
      subset.push({'state': 'N/A', 'year': 'N/A', 'total': 'N/A'});
    document.getElementById("output").innerHTML =
      `<table class="table">
        <thead>
          <tr>
            <th scope="col">State</th>
            <th scope="col">Year</th>
            <th scope="col">Arrivals</th>
          </tr>
        </thead>
        <tbody>
          <tr>
            <td>${subset[0].state}</td>
            <td>${subset[0].year}</td>
            <td>${subset[0].total}</td>
          </tr>
        </tbody>
      </table>`;
  };
```
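One caveat about the handler above: filterData() echoes the typed state name back in its result, and that value is interpolated straight into innerHTML, so markup typed into the form would be parsed as HTML. A minimal hedged sketch of a fix is to escape values before interpolating them; `escapeHtml` and `renderRows` are hypothetical helpers, not part of the repo, and `renderRows` also emits one table row per result instead of only `subset[0]`.

```javascript
// Hypothetical helper: escape characters that are significant in HTML so
// user-typed values are displayed as text rather than parsed as markup.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical helper: build one <tr> per result row.
function renderRows(subset) {
  return subset
    .map(row =>
      `<tr><td>${escapeHtml(row.state)}</td>` +
      `<td>${escapeHtml(row.year)}</td>` +
      `<td>${escapeHtml(row.total)}</td></tr>`)
    .join("");
}
```

Inside the template literal, the `<tbody>` contents would then become `${renderRows(subset)}`. Setting `textContent` on DOM-created cells is an equally valid alternative.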

If you leave either the state or the year blank, that field will default to “All.” The following code is available in /js/main.js. You’ll want to look at the filterData() function, which is where we keep the lion’s share of the functionality for aggregation and filtering.

```javascript
// with our data returned from our fetch call, we are going to
// filter the data on the values entered in the text boxes
fetchData.filterData = function(yr, state) {
  // if "All" is entered for the year, we will filter on state
  // and reduce the years to get a total of all years
  if (yr === "All") {
    let total = this.jsonData.filter(
      // return all the data where state
      // is equal to the input box
      dState => dState.state === state
    ).reduce((accumulator, currentValue) => {
      // aggregate the totals for every row that has
      // the matched value
      return accumulator + currentValue.total;
    }, 0);

    return [{'year': 'All', 'state': state, 'total': total}];
  }

  ...

  // if a specific year and state are supplied, simply
  // return the filtered subset for year and state based
  // on the supplied values by chaining the two function
  // calls together (form input values are strings while
  // the JSON years are numbers, so compare as strings)
  let subset = this.jsonData.filter(dYr => String(dYr.year) === yr)
    .filter(dSt => dSt.state === state);

  return subset;
};

// code that displays the data in the HTML table follows this. See main.js.
```

When a state or a year is blank, it defaults to “All,” so we filter our dataset on the dimension that was supplied and summarize the metric for all matching rows. When both a year and a state are entered, we simply filter on both values.
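The behavior just described can also be expressed as a single filter-then-reduce pass that treats “All” as a wildcard for either dimension. This is one possible generalization, not the repo’s filterData(); the name `summarizeSelection` is hypothetical, and it assumes rows shaped like the sample dataset with selections arriving as strings from the form inputs.

```javascript
// Hypothetical generalization of filterData (not the repo's code).
// "All" means "do not filter on this dimension"; form values are strings.
function summarizeSelection(rows, year, state) {
  const matches = rows.filter(row =>
    (year === "All" || String(row.year) === year) &&
    (state === "All" || row.state === state)
  );
  // Return an empty array so the caller's "N/A" fallback still applies.
  if (matches.length === 0) {
    return [];
  }
  const total = matches.reduce((sum, row) => sum + row.total, 0);
  return [{ year, state, total }];
}

const data = [
  { year: 2005, state: "Alabama", total: 1386 },
  { year: 2005, state: "Alaska", total: 989 },
  { year: 2006, state: "Alabama", total: 989 },
];

console.log(summarizeSelection(data, "All", "Alabama"));  // total 2375
console.log(summarizeSelection(data, "2005", "All"));     // total 2375
console.log(summarizeSelection(data, "2005", "Alabama")); // total 1386
```

Because the year and state checks are independent, the “All”/“All” case falls out for free and sums every row.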

We now have a working example where we:

- start with a raw, transactional dataset;

- create a semi-aggregated, multidimensional dataset;

- and dynamically build a fully composed result.

Note that once the data is pulled down by the client, we can manipulate it in a number of different ways without having to make subsequent calls to the server. This is especially useful because if the user loses connectivity, they don’t lose the ability to manipulate the data, which matters if you are creating a progressive web app (PWA) that needs to be available offline. (If you’re not sure whether your web app should be a PWA, this article can help.)

Once you get a firm handle on these three methods, you can create almost any analysis you want on a dataset. Map a dimension in your dataset to a broader category and summarize using reduce(). Combined with a library like D3, you can map this data into charts and graphs to allow for a fully customizable data visualization.
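As a small sketch of that map-then-reduce pattern, the example below maps each state to a broader category (reusing the Contiguous/Continental split from the map() use case) and then sums the totals per category. The `regionOf` mapping is illustrative only; a real mapping would cover every state.

```javascript
// Sample rows in the shape used throughout this article.
const ds = [
  { year: 2005, state: "Alabama", total: 1386 },
  { year: 2005, state: "Alaska", total: 989 },
  { year: 2006, state: "Alabama", total: 989 },
];

// Illustrative mapping from a state to a broader category.
const regionOf = state => (state === "Alaska" ? "Continental US" : "Contiguous US");

// map() attaches the broader category; reduce() sums totals per category
// into a plain object keyed by category name.
const totalsByRegion = ds
  .map(d => ({ region: regionOf(d.state), total: d.total }))
  .reduce((acc, d) => {
    acc[d.region] = (acc[d.region] || 0) + d.total;
    return acc;
  }, {});

console.log(totalsByRegion);
// Contiguous US: 2375, Continental US: 989
```

The resulting object (or an array built from `Object.entries(totalsByRegion)`) is the kind of small, fully composed structure you would hand to a charting library.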

This article should give you a better sense of what’s possible in JavaScript when working with data. As I mentioned, client-side JavaScript is in no way a substitute for translating and transforming data on the server, where the heavy lifting should be done. But by the same token, it also shouldn’t be completely ruled out when datasets are treated properly.