If you’ve spent enough time developing for the web, this piece of feedback has landed in your inbox since time immemorial:

“This image looks blurry. Can we replace it with a better version?”

Whenever this feedback reaches me, I’m inclined to question it: “What about the image looks bad to you, and can you tell me why?”

That’s a somewhat unfair question to counter with. The complaint is rooted in a subjective perception of image quality, which in turn is influenced by many factors. Some are technical, such as the export quality of the image or the compression method (often lossy, as is the case with JPEG-encoded images). Others are more intuitive or perceptual, such as the content of the image and how compression artifacts mingle within it. Perhaps even performance plays a role we’re not entirely aware of.

Fielding this kind of feedback for many years eventually led me to design and develop an image quality survey, which was my first go at building a research project on the web. I started with twenty-five images shot by a professional photographer. With them, I generated a large pool of images at various quality levels and sizes. Images were served randomly from this pool to users, who were asked to rate what they thought of their quality.

Results from the first round were interesting, but not entirely clear: users seemed to have a tendency to overestimate the actual quality of images, and poor performance appeared to have a negative impact on perceptions of image quality, but this couldn’t be stated conclusively. A number of UX and technical issues made it necessary to implement important improvements and conduct a second round of research. Rather than spinning my wheels trying to extract conclusions from the first-round results, I decided it would be best to improve the survey as much as possible and conduct another round of research to get better data. This article chronicles how I first built the survey, and then how I subsequently listened to user feedback to improve it.

Defining the research

Of the subjects within web performance, image optimization is especially vast. There’s a wide array of formats, encodings, and optimization tools, all of which are designed to make images small enough for web use while maintaining reasonable visual quality. Striking the balance between speed and quality is really what image optimization is all about.

This balance between performance and visual quality prompted me to consider how people perceive image quality, and lossy image quality in particular. Eventually, this train of thought led to a series of questions that spurred the design and development of an image quality perception survey. The idea of the survey is that users provide subjective assessments of quality. This is done by asking participants to rate images without an objective reference for what’s “good.” This is, after all, how people view images in situ.

A word on surveys

Any time we want to quantify user behavior, it’s inevitable that a survey is at least considered, if not ultimately chosen, to gather data from a group of people. After all, surveys are great when your goal is to get something measurable. However, the survey is a seductively dangerous tool, as Erika Hall cautions. Surveys are easy to make and conduct, and are routinely abused in their dissemination. They’re not great tools for assessing past behavior. They’re just as bad (if not worse) at predicting future behavior. For example, the 1–10 scale often employed by customer satisfaction surveys doesn’t really say much of anything about how satisfied customers actually are or how likely they’ll be to buy a product in the future.

The unfortunate reality, however, is that in lieu of my lording over hundreds of participants in person, the survey is the only truly practical tool I have to measure how people perceive image quality, as well as if (and potentially how) performance metrics correlate to those perceptions. When I designed the survey, I kept to the following guidelines:

- Don’t ask participants about anything other than what their perceptions are in the moment. Once a participant has moved on, their recollection of what they just did rapidly diminishes.

- Don’t assume participants know everything you do. Guide them with relevant copy that succinctly describes what you expect of them.

- Don’t ask participants to provide assessments with coarse inputs. Use an input type that permits them to finely assess image quality on a scale congruent with the lossy image quality encoding range.

All we can do going forward is acknowledge we’re interpreting the data we gather under the assumption that participants are being truthful and understand the task given to them. Even if the perception metrics are discarded from the data, there are still some objective performance metrics gathered that could tell a compelling story. From here, it’s a matter of defining the questions that will drive the research.

Asking the right questions

In research, you’re seeking answers to questions. In the case of this particular effort, I wanted answers to these questions:

- How accurate are people’s perceptions of lossy image quality in relation to actual quality?

- Do people perceive the quality of JPEG images differently than WebP images?

- Does performance play a role in all of this?

These are important questions. To me, however, answering the last question was the primary goal. But the road to answers was (and continues to be) a complex journey of design and development decisions. Let’s start out by covering some of the tech used to gather information from survey participants.

Sniffing out device and browser characteristics

When measuring how people perceive image quality, devices must be considered. After all, any given device’s screen will be more or less capable than others. Thankfully, HTML features such as srcset and picture are highly appropriate for delivering the best image for any given screen. This is vital because one’s perception of image quality can be adversely affected if an image is ill-fit for a device’s screen. Conversely, performance can be negatively impacted if an exceedingly high-quality (and therefore behemoth) image is sent to a device with a small screen. When sniffing out potential relationships between performance and perceived quality, these are factors that deserve consideration.

With regard to browser characteristics and conditions, JavaScript gives us plenty of tools for identifying important aspects of a user’s device. For instance, the currentSrc property reveals which image is being shown from an array of responsive images. In the absence of currentSrc, I can somewhat safely assume support for srcset or picture is lacking, and fall back to the img tag’s src value:

    const surveyImage = document.querySelector(".survey-image");
    let loadedImage = surveyImage.currentSrc || surveyImage.src;

Where screen capability is concerned, devicePixelRatio tells us the pixel density of a given device’s screen. In the absence of devicePixelRatio, you may safely assume a fallback value of 1:

    let dpr = window.devicePixelRatio || 1;

devicePixelRatio enjoys excellent browser support. Those few browsers that don’t support it (i.e., IE 10 and below) are highly unlikely to be used on high-density displays.

The stalwart getBoundingClientRect method retrieves the rendered width of an img element, while the HTMLImageElement interface’s complete property determines whether an image has finished loading. The latter of these two is important, because it may be preferable to discard individual results in situations where images haven’t loaded.
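To tie these properties together, here is a minimal sketch of a helper that gathers them into one record per specimen. The function name `describeSpecimen` and the shape of the returned object are hypothetical and not taken from the survey’s code. The extra `naturalWidth` check is also an assumption on my part: `complete` is true for images that failed to load as well, so the sketch treats a zero `naturalWidth` as “not loaded.”

```javascript
// Hypothetical helper: collects the image and device characteristics
// discussed above. `img` is an HTMLImageElement (or any object exposing the
// same properties) and `win` is the window object.
function describeSpecimen(img, win) {
  return {
    // The candidate the browser chose; falls back to src without srcset support
    loadedImage: img.currentSrc || img.src,
    // Pixel density of the screen, assuming 1 when the property is missing
    dpr: win.devicePixelRatio || 1,
    // Rendered width of the image in CSS pixels
    renderedWidth: img.getBoundingClientRect().width,
    // Assumption: an image with no intrinsic width is treated as not loaded
    loaded: img.complete === true && img.naturalWidth > 0
  };
}
```

In a browser this could be called as `describeSpecimen(document.querySelector(".survey-image"), window)`, and records where `loaded` is false could be discarded during analysis.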

In cases where JavaScript isn’t available, we can’t collect any of this data. When we collect ratings from users who have JavaScript turned off (or are otherwise unable to run JavaScript), I have to accept there will be gaps in the data. The basic information we’re still able to collect does provide some value.

Sniffing for WebP support

As you’ll recall, one of the initial questions asked was how users perceived the quality of WebP images. The HTTP Accept request header advertises WebP support in browsers like Chrome. In such cases, the Accept header might look something like this:

    Accept: image/webp,image/apng,image/*,*/*;q=0.8

As you can see, the WebP content type of image/webp is one of the advertised content types in the header content. In server-side code, you can check Accept for the image/webp substring. Here’s how that might look in Express back-end code:

    // Guard against a missing Accept header before searching it
    const WebP = (req.get("Accept") || "").indexOf("image/webp") !== -1;

In this example, I’m recording the browser’s WebP support status to a JavaScript constant I can use later to modify image delivery. I could use the picture element with multiple sources and let the browser figure out which one to use based on the source element’s type attribute value, but this approach has clear advantages. First, it’s less markup. Second, the survey shouldn’t always choose a WebP source simply because the browser is capable of using it. For any given survey specimen, the app should randomly decide between a WebP or JPEG image. Not all participants using Chrome should rate only WebP images, but rather a random smattering of both formats.
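The random choice between formats could be sketched roughly as follows. This is an illustration only: the function names `acceptsWebP` and `chooseFormat` are hypothetical, and the even 50/50 split is an assumption, since the weighting the survey actually uses isn’t stated here.

```javascript
// Returns true when an Accept header value advertises WebP support.
// A missing header is treated as "no support".
function acceptsWebP(acceptHeader) {
  return (acceptHeader || "").indexOf("image/webp") !== -1;
}

// Picks the format for one specimen. WebP is only a candidate when the
// browser supports it. `random` is injectable so the choice can be tested;
// the 50/50 split is an assumption.
function chooseFormat(supportsWebP, random = Math.random) {
  if (!supportsWebP) {
    return "jpeg";
  }
  return random() < 0.5 ? "webp" : "jpeg";
}
```

Inside an Express route handler this might be used as `chooseFormat(acceptsWebP(req.get("Accept")))`, so that WebP-capable browsers still receive JPEG specimens some of the time.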

Recording performance API data

You’ll recall that one of the earlier questions I set out to answer was whether performance impacts the perception of image quality. At this stage of the web platform’s development, there are several APIs that aid in the search for an answer:

- Navigation Timing API (Level 2): This API tracks performance metrics for page loads. More than that, it gives insight into specific page loading phases, such as redirect, request and response time, DOM processing, and more.

- Navigation Timing API (Level 1): Similar to Level 2 but with key differences. The timings exposed by Level 1 of the API lack the accuracy of those in Level 2. Furthermore, Level 1 metrics are expressed in Unix time. In the survey, data is only collected from Level 1 of the API if Level 2 is unsupported. It’s far from ideal (and also technically obsolete), but it does help fill in small gaps.

- Resource Timing API: Similar to Navigation Timing, but Resource Timing gathers metrics on various loading phases of page resources rather than the page itself. Of all the APIs used in the survey, Resource Timing is used most, as it helps gather metrics on the loading of the image specimen the user rates.

- Server Timing: In select browsers, this API is brought into the Navigation Timing Level 2 interface when a page request replies with a Server-Timing response header. This header is open-ended and can be populated with timings related to back-end processing phases. This was added to round two of the survey to quantify back-end processing time in general.

- Paint Timing API: Currently only in Chrome, this API reports two paint metrics: first paint and first contentful paint. Because a significant slice of users on the web use Chrome, we may be able to observe relationships between perceived image quality and paint metrics.
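The Level 2 to Level 1 fallback described in the list could look something like the sketch below. It is not the survey’s actual code: the function name `getNavigationTimings` and the three metrics it returns are chosen for illustration. Because Level 1 values are Unix timestamps, the sketch subtracts `navigationStart` so both levels yield milliseconds relative to the start of navigation.

```javascript
// Returns a few navigation timings in milliseconds relative to the start of
// navigation, preferring Level 2 and falling back to Level 1.
// `perf` is expected to be window.performance (or a compatible object).
function getNavigationTimings(perf) {
  if (!perf) {
    return null;
  }

  // Level 2: entries already hold relative, high-resolution timestamps
  if (typeof perf.getEntriesByType === "function") {
    const entries = perf.getEntriesByType("navigation");
    if (entries && entries.length > 0) {
      const nav = entries[0];
      return {
        level: 2,
        responseStart: nav.responseStart,
        domComplete: nav.domComplete,
        loadEventEnd: nav.loadEventEnd
      };
    }
  }

  // Level 1: values are Unix timestamps, so make them relative.
  // A value of 0 means the phase has not happened (yet) and is kept as 0.
  if (perf.timing) {
    const t = perf.timing;
    const relative = (value) => (value > 0 ? value - t.navigationStart : 0);
    return {
      level: 1,
      responseStart: relative(t.responseStart),
      domComplete: relative(t.domComplete),
      loadEventEnd: relative(t.loadEventEnd)
    };
  }

  return null;
}
```

Recording which level produced the numbers (the `level` field here) keeps the less accurate Level 1 results distinguishable during analysis.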

Using these APIs, we can record performance metrics for most participants. Here’s a simplified example of how the survey uses the Resource Timing API to gather performance metrics for the loaded image specimen:

    // Get information about the loaded image
    const surveyImageElement = document.querySelector(".survey-image");
    const fullImageUrl = surveyImageElement.currentSrc || surveyImageElement.src;
    const imageUrlParts = fullImageUrl.split("/");
    const imageFilename = imageUrlParts[imageUrlParts.length - 1];

    // Check for performance API methods
    if ("performance" in window && "getEntriesByType" in performance) {
      // Get entries from the Resource Timing API
      let resources = performance.getEntriesByType("resource");

      // Ensure resources were returned
      if (typeof resources === "object" && resources.length > 0) {
        resources.forEach((resource) => {
          // Check if the resource is for the loaded image
          if (resource.name.indexOf(imageFilename) !== -1) {
            // Access resource timings for the image here
          }
        });
      }
    }

If the Resource Timing API is available, and the getEntriesByType method returns results, an object with timings is returned, looking something like this:

    {
      connectEnd: 1156.5999999947962,
      connectStart: 1156.5999999947962,
      decodedBodySize: 11110,
      domainLookupEnd: 1156.5999999947962,
      domainLookupStart: 1156.5999999947962,
      duration: 638.1000000037602,
      encodedBodySize: 11110,
      entryType: "resource",
      fetchStart: 1156.5999999947962,
      initiatorType: "img",
      name: "https://imagesurvey.site/img-round-2/1-1024w-c2700e1f2c4f5e48f2f57d665b1323ae20806f62f39c1448490a76b1a662ce4a.webp",
      nextHopProtocol: "h2",
      redirectEnd: 0,
      redirectStart: 0,
      requestStart: 1171.6000000014901,
      responseEnd: 1794.6999999985565,
      responseStart: 1737.0999999984633,
      secureConnectionStart: 0,
      startTime: 1156.5999999947962,
      transferSize: 11227,
      workerStart: 0
    }

I capture these metrics as participants rate images, and store them in a database. Down the road, when I want to write queries and analyze the data I have, I can refer to the Processing Model for the Resource and Navigation Timing APIs. With SQL and data at my fingertips, I can measure the distinct phases outlined by the model and see if correlations exist.
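As an illustration of what “measuring the distinct phases” means, the sketch below derives a few phase durations from one Resource Timing entry. The analysis described above is done in SQL, so this JavaScript version is only a stand-in; the function name `resourcePhases` and the phase labels are hypothetical.

```javascript
// Derives phase durations (in milliseconds) from a Resource Timing entry by
// subtracting the start of each phase from its end, following the order of
// timestamps in the processing model.
function resourcePhases(entry) {
  return {
    // Time spent resolving the host name
    dns: entry.domainLookupEnd - entry.domainLookupStart,
    // Time spent establishing the connection
    connect: entry.connectEnd - entry.connectStart,
    // Time between sending the request and receiving the first byte
    waiting: entry.responseStart - entry.requestStart,
    // Time spent receiving the response body
    download: entry.responseEnd - entry.responseStart,
    // Whole fetch, from start to the last byte
    total: entry.responseEnd - entry.startTime
  };
}
```

For the sample entry shown above, this reports zero for `dns` and `connect` (the connection was reused), roughly 565.5 ms of waiting, roughly 57.6 ms of download, and a total of roughly 638.1 ms, which matches the entry’s `duration`.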

Having discussed the technical underpinnings of how data can be collected from survey participants, let’s shift the focus to the survey’s design and user flows.

Designing the survey

Though surveys tend to have straightforward designs and user flows relative to other sites, we must remain cognizant of the user’s path and the impediments a user might face.

The entry point

When participants arrive on the home page, we want to be direct in our communication with them. The home page intro copy greets participants, gives them a succinct explanation of what to expect, and presents two navigation choices:

From here, participants either start the survey or read a privacy policy. If the user decides to take the survey, they’ll reach a page politely asking them what their professional occupation is and requesting that they disclose any eyesight conditions. The fields for these questions can be left blank, as some may not be comfortable disclosing this kind of information. Beyond this point, the survey begins in earnest.

The survey primer

Before the user begins rating images, they’re redirected to a primer page. This page describes what’s expected of participants, and explains how to rate images. While the survey is promoted on design and development outlets where readers regularly work with imagery on the web, a primer is still useful in getting everyone on the same page. The first paragraph of the page stresses that users are rating image quality, not image content. This is important. Absent any context, participants may indeed rate images for their content, which isn’t what we’re asking for. After this clarification, the concept of lossy image quality is demonstrated with the following diagram:

Lastly, the function of the rating input is explained. This could likely be inferred by most, but the explanatory copy helps remove any remaining ambiguity. Assuming your user knows everything you do is not necessarily wise. What seems obvious to one is not always so to another.

The image specimen page

This page is the main event and is where participants assess the quality of images shown to them. It contains two areas of focus: the image specimen and the input used to rate the image’s quality.

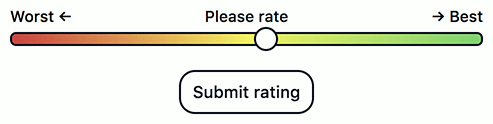

Let’s talk a bit out of order and discuss the input first. I mulled over a few options when it came to which input type to use. I considered a select input with coarsely predefined choices, an input with a type of number, and other choices. What seemed to make the most sense to me, however, was a slider input with a type of range.

A slider input is more intuitive than a text input, or a select element populated with various choices. Because we’re asking for a subjective assessment about something with such a wide range of interpretation, a slider allows participants more granularity in their assessments and lends further accuracy to the data collected.

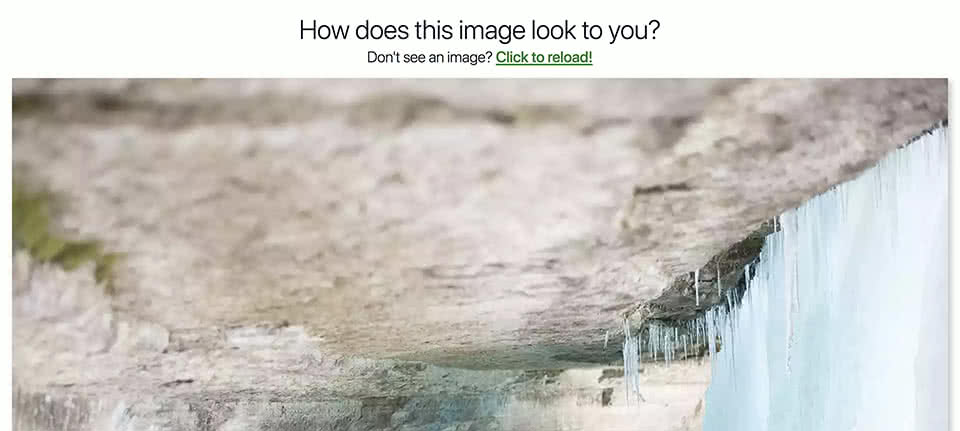

Now let’s talk about the image specimen and how it’s selected by the back-end code. I decided early on in the survey’s development that I wanted images that weren’t prominent in existing stock photo collections. I also wanted uncompressed sources so I wouldn’t be presenting participants with recompressed image specimens. To achieve this, I procured images from a local photographer. The twenty-five images I settled on were minimally processed raw images from the photographer’s camera. The result was a cohesive set of images that felt visually related to each other.

To properly gauge perception across the entire spectrum of quality settings, I needed to generate each image from the aforementioned sources at ninety-six different quality settings ranging from 5 to 100. To account for the varying widths and pixel densities of screens in the wild, each image also needed to be generated at four different widths for each quality setting: 1536, 1280, 1024, and 768 pixels, to be exact. Just the job srcset was made for!

To top it all off, images also needed to be encoded in both JPEG and WebP formats. As a result, the survey draws randomly from 768 images per specimen across the entire quality range, while also delivering the best image for the participant’s screen. This means that across the twenty-five image specimens participants evaluate, the survey draws from a pool of 19,200 images total.
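The arithmetic behind those totals can be checked with a short sketch that enumerates every variant of one specimen. The constant and function names are hypothetical; the numbers (qualities 5 through 100, four widths, two formats, twenty-five specimens) come from the description above.

```javascript
// Parameters taken from the text; names are illustrative only.
const QUALITY_MIN = 5;
const QUALITY_MAX = 100;
const WIDTHS = [1536, 1280, 1024, 768];
const FORMATS = ["jpeg", "webp"];
const SPECIMEN_COUNT = 25;

// Lists every quality/width/format combination generated for one specimen.
function variantsForSpecimen() {
  const variants = [];
  for (let quality = QUALITY_MIN; quality <= QUALITY_MAX; quality++) {
    for (const width of WIDTHS) {
      for (const format of FORMATS) {
        variants.push({ quality, width, format });
      }
    }
  }
  return variants;
}

// 96 quality settings x 4 widths x 2 formats = 768 images per specimen
const perSpecimen = variantsForSpecimen().length;
// 768 images x 25 specimens = 19,200 images in the whole pool
const poolSize = perSpecimen * SPECIMEN_COUNT;
```

Note that the browser, not the survey, picks among the four widths via srcset, so the random draw effectively happens across quality settings and formats.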

With the conception and design of the survey covered, let’s segue into how the survey was improved by incorporating user feedback into the second round.

Listening to feedback

When I launched round one of the survey, feedback came flooding in from designers, developers, accessibility advocates, and even researchers. While my intentions were good, I inevitably missed some important aspects, which made it necessary to conduct a second round. Iteration and refinement are critical to improving the usefulness of a design, and this survey was no exception. When we improve designs with user feedback, we take a project from average to something more memorable. Getting to that point means taking feedback in stride and addressing distinct, actionable items. In the case of the survey, incorporating feedback not only yielded a better user experience, it improved the integrity of the data collected.

Building a better slider input

Though the first round of the survey was serviceable, I ran into issues with the slider input. In round one of the survey, that input looked like this:

There were two recurring complaints regarding this specific implementation. The first was that participants felt they had to align their rating to one of the labels beneath the slider track. This was undesirable for the simple fact that the slider was chosen specifically to encourage participants to provide nuanced assessments.

The second complaint was that the submit button was disabled until the user interacted with the slider. This design choice was intended to prevent participants from simply clicking the submit button on every page without rating images. Unfortunately, this implementation was unintentionally hostile to the user and needed improvement, because it blocked users from rating images without a clear and obvious explanation as to why.

Fixing the problem with the labels meant redesigning the slider as it appeared in Figure 3. I removed the labels altogether to eliminate the temptation for users to align their answers to them. Additionally, I changed the slider background property to a gradient pattern, which further implied the granularity of the input.

The submit button issue was a matter of how users were prompted. In round one the submit button was visible, yet the disabled state wasn’t obvious enough to some. After consulting with a colleague, I found a solution for round two: instead of the submit button being initially visible, it’s hidden by some guide copy:

Once the user interacts with the slider and rates the image, a change event attached to the input fires, which hides the guide copy and replaces it with the submit button:

This solution is less ambiguous, and it funnels participants down the desired path. If someone with JavaScript disabled visits, the guide copy is never shown, and the submit button is immediately usable. This isn’t ideal, but it doesn’t shut out participants without JavaScript.

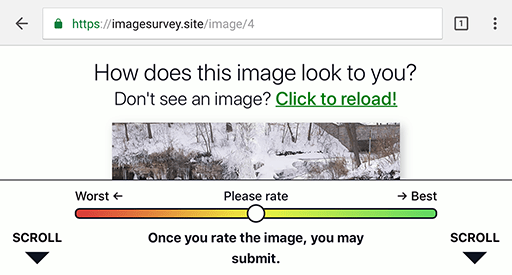
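The guide-copy swap described above might be wired up along these lines. This is a sketch, not the survey’s code: the function name `revealSubmitOnRating` is hypothetical, and it assumes the markup ships with the guide copy hidden and the submit button visible, so that nothing changes for visitors without JavaScript.

```javascript
// Swaps guide copy for the submit button once the participant rates an image.
// `slider` is the range input; `guide` and `submit` are the elements to toggle.
function revealSubmitOnRating(slider, guide, submit) {
  // JavaScript is running, so show the guide copy and hide the button for now
  guide.hidden = false;
  submit.hidden = true;

  // The first change event means a rating was given: swap the two elements
  slider.addEventListener("change", () => {
    guide.hidden = true;
    submit.hidden = false;
  }, { once: true });
}
```

Because the initial state is applied by script rather than by markup, a participant whose browser never runs this function still sees a usable submit button.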

Addressing scrolling woes

The survey page works especially well in portrait orientation. Participants can see all (or most) of the image without needing to scroll. In browser windows or mobile devices in landscape orientation, however, the survey image can be larger than the viewport:

Working with such limited vertical real estate is tricky, especially in this case where the slider needs to be fixed to the bottom of the screen (which addressed an earlier bit of user feedback from round one testing). After discussing the issue with colleagues, I decided that animated indicators in the corners of the page could signal to users that there’s more of the image to see.

When the user hits the bottom of the page, the scroll indicators disappear. Because animations may be jarring for certain users, a prefers-reduced-motion media query is used to turn off this (and all other) animations if the user has a stated preference for reduced motion. In the event JavaScript is disabled, the scrolling indicators are always hidden in portrait orientation, where they’re less likely to be useful, and always visible in landscape, where they’re potentially needed the most.
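A rough sketch of the indicator logic follows. Both function names are hypothetical. The first decides whether indicators are needed from scroll measurements. The second reads the reduced-motion preference through `matchMedia`, which is a JavaScript stand-in for the media query mentioned above (the survey itself may well apply it purely in CSS).

```javascript
// Indicators are useful only when content overflows the viewport and the
// participant has not yet scrolled to the bottom.
function shouldShowScrollIndicators({ scrollTop, viewportHeight, contentHeight }) {
  const hasOverflow = contentHeight > viewportHeight;
  const atBottom = scrollTop + viewportHeight >= contentHeight;
  return hasOverflow && !atBottom;
}

// True when the user has asked the system to minimize motion.
// `win` is the window object; browsers without matchMedia report false.
function prefersReducedMotion(win) {
  return typeof win.matchMedia === "function" &&
    win.matchMedia("(prefers-reduced-motion: reduce)").matches === true;
}
```

In a browser, the first function could be fed `window.scrollY`, `window.innerHeight`, and `document.documentElement.scrollHeight` from a scroll listener, with animation skipped whenever `prefersReducedMotion(window)` is true.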

Avoiding overscaling of image specimens

One issue that was brought to my attention by a coworker was how the survey image seemed to expand boundlessly with the viewport. On mobile devices this isn’t such a problem, but on large screens and even modestly sized high-density displays, images can be scaled excessively. Because the responsive img tag’s srcset attribute specifies a maximum resolution image of 1536w, an image can begin to overscale at sizes as “small” as 768 pixels wide on devices with a device pixel ratio of 2.

Some overscaling is inevitable and acceptable. However, when it’s excessive, compression artifacts in an image can become more pronounced. To address this, the survey image’s max-width is set to 1536px for standard displays as of round two. For devices with a device pixel ratio of 2 or higher, the survey image’s max-width is set to half that at 768px:
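Expressed as a function, the rule might be sketched like this. The survey most likely applies it in CSS; this JavaScript version, along with the name `maxSpecimenWidth`, is only an illustration of the same two-tier logic.

```javascript
// Widest srcset candidate generated for each specimen, in pixels
const LARGEST_CANDIDATE_WIDTH = 1536;

// Returns the maximum CSS width (in px) at which a specimen should render.
// On displays with a device pixel ratio of 2 or more, each CSS pixel covers
// at least two device pixels, so the cap is halved.
function maxSpecimenWidth(dpr) {
  const ratio = dpr || 1; // assume a standard display when dpr is unknown
  return ratio >= 2 ? LARGEST_CANDIDATE_WIDTH / 2 : LARGEST_CANDIDATE_WIDTH;
}
```

A call such as `maxSpecimenWidth(window.devicePixelRatio)` gives 1536 on a standard display and 768 on a 2x display, matching the values described above.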

This minor (yet important) fix ensures that images aren’t scaled beyond a reasonable maximum. With a reasonably sized image asset in the viewport, participants will assess images close to or at a given image asset’s natural dimensions, particularly on large screens.

User feedback is valuable. These and other UX feedback items I incorporated improved both the function of the survey and the integrity of the collected data. All it took was sitting down with users and listening to them.

As round two of the survey gets under way, I’m hoping the data gathered reveals something exciting about the relationship between performance and how people perceive image quality. If you want to be part of the effort, please take the survey. When round two concludes, keep an eye out here for a summary of the results!

Thank you to those who gave their valuable time and feedback to make this article as good as it could possibly be: Aaron Gustafson, Jeffrey Zeldman, Brandon Gregory, Rachel Andrew, Bruce Hyslop, Adrian Roselli, Meg Dickey-Kurdziolek, and Nick Tucker.

Additional thanks to those who helped improve the image quality survey: Mandy Tensen, Darleen Denno, Charlotte Dann, Tim Dunklee, and Thad Roe.