It’s important to remember that HTTP/2-specific optimizations may become performance liabilities for HTTP/1 users. In the final part of this series, we’ll talk about the performance implications of such a strategy and how build tools can help you manage HTTP/1- and HTTP/2-specific assets.

Our generalized example from the previous article shows how we can adapt delivery of site assets to a user’s connection. Now let’s see how this affects performance in the real world.

Observing performance outcomes

Creating a testing methodology

Low-speed mobile connections are quite common in the developing world. Out of curiosity, I wanted to simulate HTTP/1 and HTTP/2 scenarios with my own site on an actual low-speed mobile connection.

In a surprisingly fortuitous turn of events, I ran out of high-speed mobile data the month I was planning to test. In lieu of extra charges, my provider simply throttles the connection speed to 2G. Perfect timing, so I tethered to my iPhone and got started.

To gauge performance in any given scenario, you need a good testing methodology. I wanted to test three distinct scenarios:

- Site optimized for HTTP/2: This is the site’s default optimization strategy when served using HTTP/2. If the detected protocol is HTTP/2, a higher number of small, granular assets is served.

- Site not optimized for HTTP/1: This scenario occurs when HTTP/2 isn’t supported by the browser and content delivery isn’t adapted to account for that limitation. In other words, content and assets are optimized for HTTP/2 delivery, making them suboptimal for HTTP/1 users.

- Site optimized for HTTP/1: HTTP/2-incompatible browsers are served HTTP/1 optimizations after the content delivery strategy adapts to the browser’s limitations.

The tool I selected for testing was sitespeed.io. sitespeed.io is a nifty command line tool, installable via Node’s package manager (npm), that’s packed with options for automating performance testing. sitespeed.io collects various page performance metrics each time it finishes a session.

To collect performance data in each scenario, I used the following command in the terminal window:

```sh
sitespeed.io -b chrome -n 200 --browsertime.viewPort 320x480 -c native -d 1 -m 1 https://jeremywagner.me
```

There are plenty of arguments here, but the gist is that I’m testing my site’s URL using Chrome (-b chrome). The test runs 200 times (-n 200) for each of the three scenarios and uses a 320×480 viewport. For a full list of sitespeed.io’s runtime options, check out their documentation.

The test results

We’re tracking three aspects of page performance: the total time it takes for the page to load, the time it takes for the DOMContentLoaded event to fire on the client, and the time it takes for the browser to begin painting the page.
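If you want to collect the same three metrics yourself, browsers expose them through the Navigation Timing and Paint Timing APIs. The sketch below is an illustration under stated assumptions, not how sitespeed.io measures them: `pageMetrics` is a hypothetical helper, and it treats `loadEventEnd` as page load time, `domContentLoadedEventStart` as the moment DOMContentLoaded fires, and the `first-paint` entry as time to first paint.

```javascript
// Hypothetical helper: derive the three metrics discussed above from a
// PerformanceNavigationTiming entry and the page's paint entries.
// An illustration only, not sitespeed.io's implementation.
function pageMetrics(nav, paintEntries) {
  // Paint Timing isn't supported everywhere, so first paint may be missing
  var firstPaint = paintEntries.find(function (entry) {
    return entry.name === "first-paint";
  });

  return {
    // Time until the load event handlers finished running
    pageLoad: nav.loadEventEnd - nav.startTime,
    // Time until the DOMContentLoaded event started firing
    domContentLoaded: nav.domContentLoadedEventStart - nav.startTime,
    // Paint entries share the navigation entry's time origin
    firstPaint: firstPaint ? firstPaint.startTime - nav.startTime : null
  };
}

// Only run the collection step in a browser
if (typeof window !== "undefined") {
  window.addEventListener("load", function () {
    // loadEventEnd stays 0 until the load handlers return, so wait a tick
    setTimeout(function () {
      var nav = performance.getEntriesByType("navigation")[0];
      console.log(pageMetrics(nav, performance.getEntriesByType("paint")));
    }, 0);
  });
}
```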

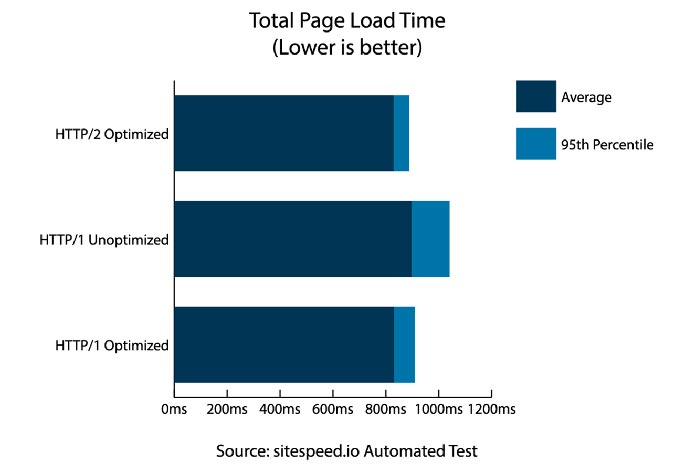

First, let’s look at total page load times for each scenario (Fig. 1).

This graph illustrates a trend that you’ll see again later. The scenarios optimized for HTTP/1 and HTTP/2 show similar levels of performance when running on their respective versions of the protocol. The slowest scenario runs on HTTP/1, yet has been optimized for HTTP/2.

In these graphs, we’re plotting two figures: the average and the 95th percentile (meaning load times are below this value 95% of the time). What this data tells me is that if I moved my site to HTTP/2 but didn’t optimize for HTTP/2-incompatible browsers, average page load time for that segment of users would be 10% slower 95% of the time. And 5% of the time, page loading might be 15% slower.
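The average and 95th percentile plotted in these graphs, and the “percent slower” comparisons throughout this section, come down to simple arithmetic over each scenario’s 200 runs. The sketch below uses the nearest-rank definition of a percentile, which is only one of several definitions; sitespeed.io’s own calculation may differ, and the function names are hypothetical.

```javascript
// Arithmetic mean of a list of timings (milliseconds)
function mean(samples) {
  return samples.reduce(function (sum, x) { return sum + x; }, 0) / samples.length;
}

// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it
function percentile(samples, p) {
  var sorted = samples.slice().sort(function (a, b) { return a - b; });
  var rank = Math.ceil((p * sorted.length) / 100); // 1-based rank
  return sorted[Math.max(rank, 1) - 1];
}

// How much slower a value is than a baseline, as a percentage
function percentSlower(value, baseline) {
  return ((value - baseline) * 100) / baseline;
}
```

For example, if an HTTP/1-optimized run averaged 1,000 ms and the unoptimized run averaged 1,100 ms, `percentSlower(1100, 1000)` returns 10, a 10% slowdown. These numbers are illustrative, not measurements from the tests above.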

For a small and uncomplicated site such as my blog, this may seem insignificant, but it really isn’t. What if my site is experiencing heavy traffic? Changing how I deliver content to be more inclusive of users with limited capabilities could be the difference between a user who sticks around and one who decides to leave after waiting too long.

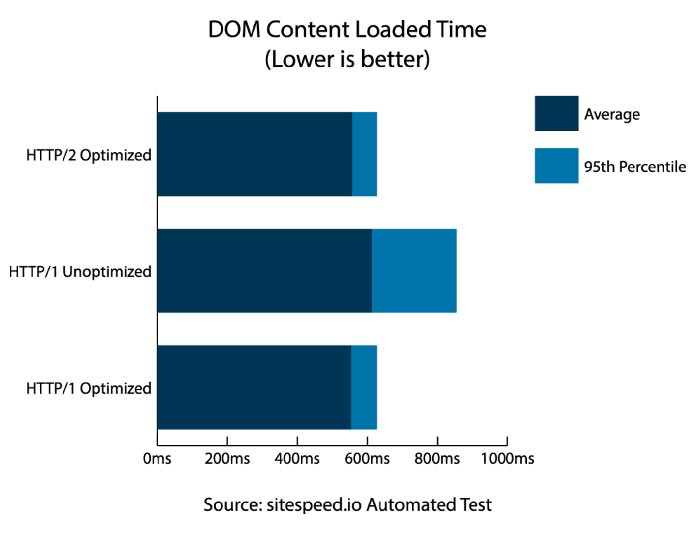

Let’s look at how long it takes for the DOM to be ready in each scenario (Fig. 2).

Again, we see similar levels of performance when a site is optimized for its particular protocol. In the scenario where the site is optimized for HTTP/2 but runs on HTTP/1, the DOMContentLoaded event fires 10% more slowly than in either of the “optimal” scenarios. This occurs 95% of the time. The other 5% of the time, however, it could be as much as 26% slower.

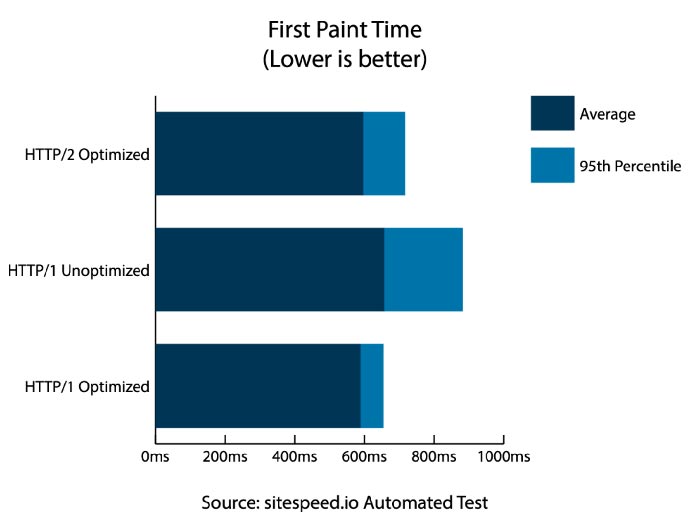

What about time to first paint? This is arguably the most important performance metric because it’s the first point at which the user actually sees your website. What happens to this metric when we optimize our content delivery strategy for each protocol version? (Fig. 3)

The trend persists yet again. In the HTTP/1 Unoptimized scenario, paint time is 10% longer than in either of the optimized scenarios 95% of the time, and nearly 20% longer during the other 5%.

A 10 to 20% delay in page paint time is a serious concern. If you had the ability to speed up rendering for a large segment of your audience, wouldn’t you?

Another way to improve this metric for HTTP/1 users is to inline critical CSS. That’s an option for me, since my site’s CSS is 2.2KB after Brotli compression. On HTTP/2 sites, you can achieve a performance benefit similar to inlining by using the protocol’s Server Push feature.
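Here’s a sketch of how those two options could be chosen per request in Node, assuming the server can see the request’s protocol version (Node’s `req.httpVersion` reports "2.0" through the http2 compatibility API). `criticalCssStrategy`, `pushAssets`, and `readAsset` are hypothetical names; `pushStream`, `pushAllowed`, and `respond` are part of Node’s core `http2` module. Chrome has since removed support for Server Push, so treat the HTTP/2 branch as illustrative.

```javascript
// Hypothetical helper: decide how critical CSS reaches the browser, based
// on the protocol version Node reports for the request ("1.1", "2.0", ...).
function criticalCssStrategy(httpVersion, criticalCss, cssPath) {
  if (httpVersion.startsWith("2")) {
    // HTTP/2: reference the stylesheet and push it alongside the HTML
    return {
      headMarkup: '<link rel="stylesheet" href="' + cssPath + '">',
      pushPaths: [cssPath]
    };
  }

  // HTTP/1: inline the small critical stylesheet to avoid an extra request
  return {
    headMarkup: "<style>" + criticalCss + "</style>",
    pushPaths: []
  };
}

// Push each path on an http2 server stream. readAsset is a hypothetical
// callback that returns the file contents for a path.
function pushAssets(stream, pushPaths, readAsset) {
  // Respect clients that have turned push off in their SETTINGS frame
  if (!stream.pushAllowed) return;

  pushPaths.forEach(function (path) {
    stream.pushStream({ ":path": path }, function (err, pushStream) {
      if (err) return; // the push couldn't be started
      // Only stylesheets are pushed in this sketch
      pushStream.respond({ ":status": 200, "content-type": "text/css" });
      pushStream.end(readAsset(path));
    });
  });
}
```

In an `http2` server’s "stream" handler, you would call `pushAssets` before responding with the HTML that references the stylesheet.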

Now that we’ve examined the performance implications of tailoring content delivery to the user’s HTTP protocol version, let’s learn how to automatically generate optimized assets for both segments of your users.

Build tools can help

You’re busy enough as it is. I get it. Maintaining two sets of assets optimized for two different types of users sounds like a huge pain. But this is where a build tool like gulp comes into the picture.

If you’re using gulp (or other automation tools like Grunt or webpack), chances are you’re already automating things like script minification (or uglification, depending on how aggressive your optimizations are). Below is a generalized example of how you could use the gulp-uglify and gulp-concat plugins to uglify files, and then concatenate those separate uglified assets into a single one.

```javascript
var gulp = require("gulp"),
    uglify = require("gulp-uglify"),
    concat = require("gulp-concat");

// Uglification: minify each script in src/js and write it to dist/js
gulp.task("uglify", function () {
  var src = "src/js/*.js",
      dest = "dist/js";

  return gulp.src(src)
    .pipe(uglify())
    .pipe(gulp.dest(dest));
});

// Concatenation (gulp 4 syntax): gulp.series runs "uglify" first, then
// bundles the minified scripts. The bundle itself is excluded from the
// glob so repeated builds don't fold an old bundle into the new one.
gulp.task("concat", gulp.series("uglify", function () {
  var src = ["dist/js/*.js", "!dist/js/script-bundle.js"],
      dest = "dist/js";

  return gulp.src(src)
    .pipe(concat("script-bundle.js"))
    .pipe(gulp.dest(dest));
}));
```

In this example, all scripts in the src/js directory are uglified by the uglify task, and each processed script is output separately to dist/js. Running the concat task first runs uglify, then bundles all of those scripts into a single file named script-bundle.js. You can then use the protocol detection technique shown in part one of this article series to change which scripts you serve based on the visitor’s protocol version.
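The detection code from part one isn’t reproduced here, but as a rough Node sketch of the same idea, a server could choose between the bundle and the granular files based on `req.httpVersion`. Apart from `script-bundle.js`, the asset paths are made up, and `scriptsFor`, `scriptTags`, and `handler` are hypothetical names.

```javascript
// Hypothetical asset lists: the gulp-built bundle for HTTP/1 visitors and
// granular files (names invented for this sketch) for HTTP/2 visitors.
var assets = {
  http1: ["/js/script-bundle.js"],
  http2: ["/js/nav.js", "/js/carousel.js", "/js/forms.js"]
};

// Node reports "1.0" or "1.1" for HTTP/1 requests and "2.0" for requests
// handled through the http2 compatibility API.
function scriptsFor(httpVersion) {
  return httpVersion.startsWith("2") ? assets.http2 : assets.http1;
}

// Render one deferred <script> element per asset
function scriptTags(httpVersion) {
  return scriptsFor(httpVersion).map(function (src) {
    return '<script src="' + src + '" defer></script>';
  }).join("\n");
}

// A request handler usable with http.createServer or with
// http2.createSecureServer's compatibility API
function handler(req, res) {
  res.setHeader("Content-Type", "text/html; charset=utf-8");
  res.end("<!doctype html>\n<title>Demo</title>\n" + scriptTags(req.httpVersion));
}
```

Attached to `http.createServer`, this handler always takes the HTTP/1 branch; attached to `http2.createSecureServer` with `allowHTTP1: true`, it would serve the granular files to HTTP/2 clients and the bundle to HTTP/1 clients.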

Of course, that’s not the only thing you can do with a build system. You could take the same bundling approach with your CSS files, or even generate image sprites from separate images with the gulp.spritesmith plugin.
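To make the sprite idea concrete: a sprite generator packs several images into one sheet and emits CSS that shifts the sheet so each image shows through its own element. The sketch below uses the simplest top-to-bottom layout. It illustrates the concept only; it is not gulp.spritesmith’s code or output format, `topDownSprite` is a hypothetical name, and it computes only the layout and CSS, leaving pixel compositing to a real tool.

```javascript
// Hypothetical sketch: stack images top to bottom in one sprite sheet and
// generate one CSS rule per image. Only the layout math is shown; a real
// tool such as gulp.spritesmith also composites the pixels.
function topDownSprite(images, sheetUrl) {
  var offsetY = 0;
  var sheetWidth = 0;

  var rules = images.map(function (img) {
    // Shift the sheet up so this image's top edge sits at the element's top
    var rule = "." + img.name + " { background: url(" + sheetUrl + ") 0 " +
      (-offsetY) + "px; width: " + img.width + "px; height: " +
      img.height + "px; }";
    offsetY += img.height;
    sheetWidth = Math.max(sheetWidth, img.width);
    return rule;
  });

  return { width: sheetWidth, height: offsetY, css: rules.join("\n") };
}
```

A vertical stack wastes space when image widths differ a lot; smarter packing layouts trade that simplicity for a smaller sheet.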

The takeaway here is that a build system makes it easy to maintain two sets of optimized assets (among many other things, of course). It can be done easily and automatically, freeing you up to focus on development and on improving performance for your site’s visitors.

We’ve seen how an HTTP/2-optimized site can perform poorly for users with HTTP/2-incompatible browsers.

But why are these users’ capabilities limited? It really depends.

Socioeconomic conditions play a big role. Users tend to buy the quality of device they can afford, so the capabilities of the “average” device vary significantly, especially between developing and developed nations.

Lack of financial resources may also push users toward restricted data plans and browsers like Opera Mini that minimize data usage. Until those browsers support HTTP/2, a significant percentage of users out there may never come on board.

Updating phone applications is also problematic for someone on a restricted data plan. Immediate adoption can’t be expected, and some may forgo browser updates in favor of preserving what remains of their plan’s data allotment. In developing nations, the quality of internet infrastructure lags well behind that of the developed world.

We can’t change the behavior of every user to suit our development preferences. What we can do, though, is identify the audience segment that can’t support HTTP/2, then make an informed decision about whether it’s worth the effort to adapt how we deliver content to them. If a sizeable portion of the audience uses HTTP/2-incompatible browsers, we can change how we deliver content to them. We can deliver an optimized experience and give them a leg up, and we can do so while still providing performance advantages for those users whose browsers support HTTP/2.
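One way to size that segment is to check which protocol your visitors’ requests actually arrive over. The sketch below assumes an access log in the common or combined format, where the quoted request line ends with the protocol (nginx, for example, records HTTP/2 requests as `HTTP/2.0`). It counts requests rather than visitors, and if a CDN or proxy terminates connections in front of your server, the origin log reflects that hop’s protocol instead, so treat the result as a rough signal. `protocolShare` is a hypothetical name.

```javascript
// Hypothetical log analysis: estimate what share of requests arrive over
// HTTP/1.x. Assumes common/combined log format request lines such as
// "GET /path HTTP/1.1" or "GET /path HTTP/2.0".
function protocolShare(logLines) {
  var counts = { http1: 0, http2: 0, other: 0 };

  logLines.forEach(function (line) {
    // Pull the protocol token out of the quoted request line
    var match = line.match(/"[A-Z]+ \S+ (HTTP\/[\d.]+)"/);
    if (!match) {
      counts.other++;
    } else if (match[1].startsWith("HTTP/2")) {
      counts.http2++;
    } else if (match[1].startsWith("HTTP/1")) {
      counts.http1++;
    } else {
      counts.other++;
    }
  });

  // Share of recognized HTTP requests that used HTTP/1.x
  var total = counts.http1 + counts.http2;
  counts.http1Share = total ? counts.http1 / total : 0;
  return counts;
}
```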

Many people out there face significant challenges while browsing the web. Before we fully embrace new technologies, let’s figure out how to do so without leaving a large segment of our audience in the lurch. The reward in what we do comes from providing solutions that work for everyone. Let’s adopt new technologies responsibly. It behooves us all to act with care.

Learn more about boosting site performance with Jeremy’s book Web Performance in Action. Get 39% off with code ALAWPA.