One morning I found a little time to work on nodemon and saw a new pull request that fixed a small bug. The only problem with the pull request was that it didn’t have tests and didn’t follow the contributing guidelines, which results in the automated deploy not running.


The contributor was clearly very new to Git and GitHub, and even this small change was well out of their comfort zone, so when I asked for the changes to adhere to the way the project works, it all kind of fell apart.

How do I change this? How do I make it easier and more welcoming for outside developers to contribute? How do I make sure contributors don’t feel like they’re being asked to do more than necessary?

This last point is important.

The real cost of a one-line change

Many times in my own code, I’ve made a single-line change that could be a matter of a few characters, and this alone fixes an issue. Except that’s never enough. (In fact, there’s usually a correlation between the maturity and/or age of the project and the amount of extra work needed to complete the change, due to the increasing complexity of systems over time.)

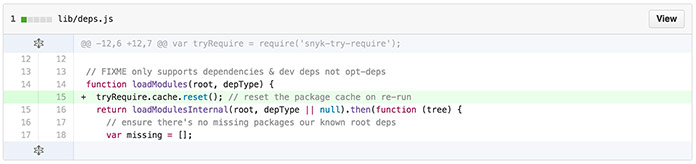

A recent issue in my Snyk work was fixed with this single-line change:

In this particular example, I had solved the problem in my head very quickly and realized that this was the fix. Except that I then had to write the test to support the change, not only to prove that it works but to prevent regression in the future.

My projects (and Snyk’s) all use semantic release to automate releases by commit message. In this particular case, I had to bump the dependencies in the Snyk command line and then commit that with the right message format to ensure a release would inherit the fix.

All in all, the one-line fix was this: one line, one new test, tested across four versions of node, bump dependencies in a secondary project, ensure commit messages were right, and then wait for the secondary project’s tests to all pass before it was automatically published.

Put simply: it’s never just a one-line fix.

Helping those first pull requests

Doing a pull request (PR) into another project can be pretty daunting. I’ve got a fair amount of experience and even I’ve started and aborted pull requests because I found the chain of events leading up to a complete PR too complex.

So how can I change my projects and GitHub repositories to be more welcoming to new contributors and, most important, how can I make that first PR easy and safe?

Issue and pull request templates

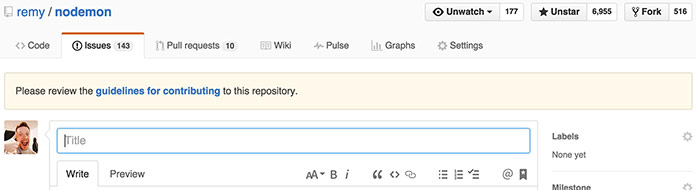

GitHub recently announced support for issue and PR templates. These are a great start because now I can specifically ask for items to be checked off, or information to be filled out to help diagnose issues.

Here’s what the PR template looks like for Snyk’s command line interface (CLI):

- [ ] Ready for review

- [ ] Follows CONTRIBUTING guidelines

- [ ] Reviewed by @remy (Snyk internal team)

#### What does this PR do?

#### Where should the reviewer start?

#### How should this be manually tested?

#### Any background context you want to provide?

#### What are the relevant tickets?

#### Screenshots

#### Additional questions

This is partly based on QuickLeft’s PR template. These items are not hard prerequisites on the actual PR, but they do help in getting full information. I’m slowly adding these to all my repos.

In addition, having a CONTRIBUTING.md file in the root of the repo (or in .github) means new issues and PRs include the notice in the header:

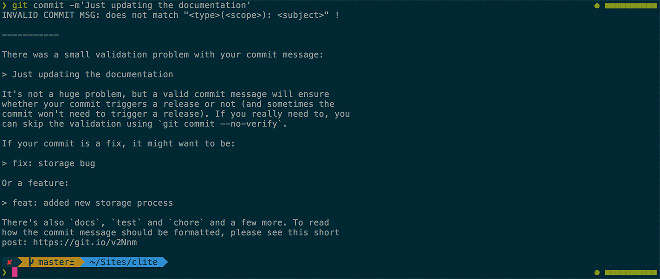

For context: semantic release will read the commits in a push to master, and if there’s a feat: commit, it’ll do a minor version bump. If there’s a fix: commit, it’ll do a patch version bump. If the text BREAKING CHANGE: appears in the body of a commit, it’ll do a major version bump.
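The three rules can be sketched as a small function. This is only an illustration of the mapping described here, not semantic release’s own code: the name releaseTypeFor is hypothetical, and accepting an optional scope such as feat(cli): is an assumption on my part.

```javascript
// Hypothetical helper, not part of semantic release itself.
// Returns 'major', 'minor', 'patch', or null (no release) for a list of commit messages.
function releaseTypeFor(commitMessages) {
  const rank = { patch: 1, minor: 2, major: 3 };
  let result = null;

  for (const message of commitMessages) {
    const lines = message.split('\n');
    const header = lines[0];
    const body = lines.slice(1).join('\n');
    let type = null;

    if (body.includes('BREAKING CHANGE:')) {
      type = 'major'; // breaking change noted in the commit body
    } else if (/^feat(\([^)]*\))?:/.test(header)) {
      type = 'minor'; // new feature (optional scope is an assumption)
    } else if (/^fix(\([^)]*\))?:/.test(header)) {
      type = 'patch'; // bug fix
    }

    // Keep the largest bump seen so far across all commits in the push.
    if (type !== null && (result === null || rank[type] > rank[result])) {
      result = type;
    }
  }

  return result;
}
```

A push containing only commits that match none of the rules returns null here, which stands in for “no release.”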

I’ve been using semantic release in all of my projects. As long as the commit message format is right, there’s no work involved in creating a release, and no work in deciding what the version is going to be.

Something that none of my repos historically had was the ability to validate contributed commits for formatting. In reality, semantic release doesn’t mind if you don’t follow the commit format; those commits are simply ignored and don’t drive releases (to npm).

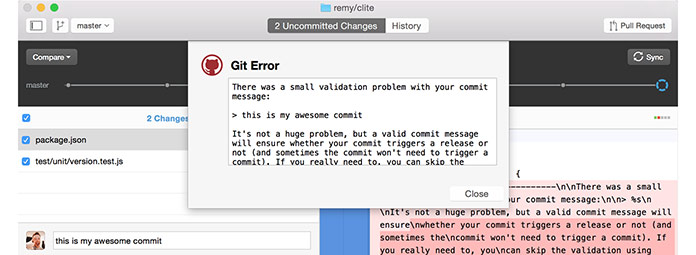

I’ve since come across ghooks, which will run commands on Git hooks, in particular validate-commit-msg on the commit-msg hook. The install is relatively straightforward, and the feedback to the user is really good because if the commit needs tweaking to follow the commit format, I can include examples and links.

Here’s what it looks like on the command line:

…and in the GitHub desktop app (for comparison):

This is work that I can load on myself to make contributing easier, which in turn makes my job easier when it comes to managing and merging contributions into the project. In addition, for my projects, I’m also adding a pre-push hook that runs all the tests before the push to GitHub is allowed. That way if new code has broken the tests, the author is aware.

To see the changes required to get the output above, see this commit in my current tinker project.
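For readers who have not used a commit-msg hook, the kind of check it performs can be sketched in a few lines. This is an illustration only, not the validate-commit-msg source: the function name checkCommitMessage, the list of allowed types, and the wording of the hints are all assumptions.

```javascript
// Hypothetical check, loosely modelled on what a commit-msg hook does.
// The allowed types below are an assumed list, not taken from any real config.
const ALLOWED_TYPES = ['feat', 'fix', 'docs', 'style', 'refactor', 'perf', 'test', 'chore'];

function checkCommitMessage(message) {
  // Only the first line (the header) is checked in this sketch.
  const header = message.split('\n')[0];
  const match = /^(\w+)(\([^)]*\))?: (.+)$/.exec(header);

  if (!match) {
    return {
      ok: false,
      hint: 'Expected "<type>: <subject>", for example "fix: ignore empty watch list".',
    };
  }

  const type = match[1];
  if (!ALLOWED_TYPES.includes(type)) {
    return {
      ok: false,
      hint: `Unknown type "${type}". Use one of: ${ALLOWED_TYPES.join(', ')}.`,
    };
  }

  return { ok: true, hint: '' };
}
```

The point of returning a hint rather than a bare pass or fail is the one made above: a rejected commit is a chance to show the contributor an example of the expected format.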

There are two further areas worth investigating. The first is the commitizen project. Second, what I’d really like to see is a GitHub bot that could automatically comment on pull requests to say whether the commits are okay (and if not, direct the contributor on how to fix that problem) and also to show how the PR would affect the release (i.e., whether it would trigger a release, either as a bug patch or a minor version change).

Including example tests

I think this might be the crux of the problem: the lack of example tests in any project. A test can be a minefield of challenges, such as these:

- knowing the test framework

- knowing the application code

- knowing about testing methodology (unit tests, integration, something else)

- replicating the test environment

Another project of mine, inliner, has a disproportionately high rate of PRs that include tests. I put that down to the ease with which users can add tests.

The contributing guide makes it clear that contributing doesn’t even require that you write test code. Authors just create a source HTML file and the expected output, and the test automatically includes the file and checks that the output is as expected.

Adding specific examples of how to write tests will, I believe, lower the barrier of entry. I might link to some sort of sample test in the contributing doc, or create some kind of harness (like inliner does) to make it easy to add input and expected output.
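A harness of that kind could look roughly like the sketch below. It is not inliner’s actual test code: the file naming (name.src.html paired with name.result.html), the function names, and the whitespace-trimmed comparison are assumptions chosen for illustration.

```javascript
const fs = require('fs');
const path = require('path');

// Assumed naming convention: "<name>.src.html" is the input and
// "<name>.result.html" is the expected output for the same fixture.
const SOURCE_SUFFIX = '.src.html';
const RESULT_SUFFIX = '.result.html';

// Pair every source file in `dir` with its expected-output file.
function findFixtures(dir) {
  return fs.readdirSync(dir)
    .filter((file) => file.endsWith(SOURCE_SUFFIX))
    .sort()
    .map((file) => {
      const name = file.slice(0, file.length - SOURCE_SUFFIX.length);
      return {
        name,
        source: path.join(dir, file),
        expected: path.join(dir, name + RESULT_SUFFIX),
      };
    });
}

// Run `transform` (the code under test) over each source file and
// report whether its output matches the expected file.
function runFixtures(dir, transform) {
  return findFixtures(dir).map(({ name, source, expected }) => {
    const actual = transform(fs.readFileSync(source, 'utf8'));
    const wanted = fs.readFileSync(expected, 'utf8');
    return { name, passed: actual.trim() === wanted.trim() };
  });
}
```

With something like this in place, a contributor adds a test by dropping two files into the fixtures directory; no test code needs to be written or understood.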

Fixing common mistakes

Something I’ve also come to accept is that developers don’t read contributing docs. It’s okay, we’re all busy, we don’t always have time to pore over documentation. Heck, contributing to open source isn’t easy.

I’m going to start including a short document on how to fix common problems in pull requests. Often it’s amending a commit message or rebasing the commits. This is easy for me to document, and will allow me to direct new users to a walkthrough of how to fix their commits.

In fact, most of these items are straightforward and not much work to implement. Sure, I wouldn’t drop everything I’m doing and add them to all my projects at once, but certainly I’d include them in each active project as I work on it.

- Add issue and pull request templates.

- Add ghooks and validate-commit-msg with standard language (most if not all of my projects are node-based).

- Either make adding a test super easy, or at least include sample tests (for unit testing and potentially for integration testing).

- Add a contributing document that includes notes about commit format, tests, and anything that can make the contributing process smoother.

Finally, I (and we) always need to keep in mind that when someone has taken time out of their day to contribute code to our projects, whatever the state of the pull request, it’s a big deal.

It takes commitment to contribute. Let’s show some love for that.