For the last decade, web performance optimization has been governed by one indisputable rule: the best request is no request. A humble rule, easy to interpret. Every network call for a resource eliminated improves performance. Every src attribute spared, every link element dropped. But everything has changed now that HTTP/2 is available, hasn't it? Designed for the modern web, HTTP/2 is more efficient than its predecessor at responding to a larger number of requests. So the question is: does the old rule of reducing requests still hold up?


What has changed with HTTP/2?

To understand how HTTP/2 is different, it helps to know about its predecessors. A brief history follows. HTTP builds on TCP. While TCP is powerful and capable of transferring lots of data reliably, the way HTTP/1 used TCP was inefficient. Every resource requested required a new TCP connection. And every TCP connection required synchronization between the client and server, resulting in an initial delay as the browser established a connection. This was OK in times when the majority of web content consisted of unstyled documents that didn't load additional resources, such as images or JavaScript files.

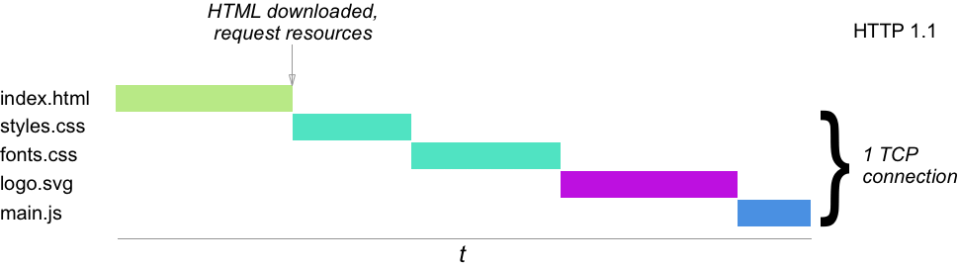

Updates in HTTP/1.1 try to overcome this limitation. Clients can use one TCP connection for multiple resources, but they still have to download them in sequence. This so-called “head-of-line blocking” makes waterfall charts actually look like waterfalls:

Also, most browsers started to open multiple TCP connections in parallel, limited to a fairly low number per domain. Even with such optimizations, HTTP/1.1 is not well suited to the considerable number of resources on today's websites. Hence the saying “The best request is no request.” TCP connections are costly and take time. This is why we use things like concatenation, image sprites, and inlining of resources: avoid new connections, and reuse existing ones.
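A deliberately idealized back-of-the-envelope model makes the cost of connection setup concrete. It is not a measurement. It assumes that every TCP handshake and every request/response costs exactly one round trip (RTT), and it ignores TLS, TCP slow start, bandwidth, and server processing time. The function names, the 100 ms RTT, and the six-connection limit are illustrative assumptions.

```python
import math

def http1_connection_per_resource(n_resources: int, rtt_ms: float) -> float:
    """A new TCP connection per resource, fetched one after another:
    each resource pays a handshake RTT plus a request/response RTT."""
    return n_resources * 2 * rtt_ms

def http11_keep_alive(n_resources: int, rtt_ms: float) -> float:
    """One reused connection, resources fetched in sequence
    (head-of-line blocking): one handshake, then one RTT per resource."""
    return rtt_ms + n_resources * rtt_ms

def http11_parallel(n_resources: int, rtt_ms: float, max_connections: int = 6) -> float:
    """Several connections opened at once (their handshakes overlap);
    resources are spread across them in rounds."""
    rounds = math.ceil(n_resources / max_connections)
    return rtt_ms + rounds * rtt_ms

def http2_multiplexed(n_resources: int, rtt_ms: float) -> float:
    """One connection; all requests go out together and responses are
    interleaved, so (ignoring bandwidth) one round trip covers them all."""
    return rtt_ms + (rtt_ms if n_resources > 0 else 0)

for model in (http1_connection_per_resource, http11_keep_alive,
              http11_parallel, http2_multiplexed):
    print(f"{model.__name__:32} {model(8, 100):6.0f} ms")
```

With eight resources and a 100 ms RTT, the models give 1600, 900, 300, and 200 ms respectively. Real transfers also depend on bandwidth, which the HTTP/2 model leaves out entirely. That omission matters once many resources share one connection.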

HTTP/2 is fundamentally different from HTTP/1.1. HTTP/2 uses a single TCP connection and allows more resources to be downloaded in parallel than its predecessor. Think of this single TCP connection as one broad tunnel through which data is sent in frames. On the client, all frames are reassembled into their original resources. Using a couple of link elements to transfer style sheets is now practically as efficient as bundling all of your style sheets into one file.
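The "one broad tunnel" picture can be sketched as a toy model of multiplexing. Each response is cut into frames tagged with a stream ID. Frames from different streams are interleaved on the single connection, and the client regroups them by stream ID. This is a simplification. Real HTTP/2 frames carry a binary header with length, type, flags, and stream identifier. The tiny frame size and the round-robin scheduling here are assumptions chosen to make the interleaving visible.

```python
from collections import defaultdict
from itertools import zip_longest

FRAME_SIZE = 4  # bytes per frame; tiny on purpose so the interleaving is visible

def to_frames(stream_id: int, payload: bytes, size: int = FRAME_SIZE):
    """Split one response body into (stream_id, chunk) frames."""
    return [(stream_id, payload[i:i + size]) for i in range(0, len(payload), size)]

def interleave(responses: dict[int, bytes]) -> list[tuple[int, bytes]]:
    """Round-robin the frames of all streams onto one 'connection'."""
    per_stream = [to_frames(sid, body) for sid, body in responses.items()]
    wire = []
    for batch in zip_longest(*per_stream):
        wire.extend(frame for frame in batch if frame is not None)
    return wire

def reassemble(wire: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Client side: regroup frames by stream ID, preserving per-stream order."""
    parts = defaultdict(list)
    for sid, chunk in wire:
        parts[sid].append(chunk)
    return {sid: b"".join(chunks) for sid, chunks in parts.items()}

# Client-initiated HTTP/2 streams use odd IDs, hence 1, 3, 5.
responses = {1: b"body{margin:0}", 3: b"nav{display:flex}", 5: b"footer{}"}
wire = interleave(responses)
print([sid for sid, _ in wire])  # → [1, 3, 5, 1, 3, 5, 1, 3, 1, 3, 3]
assert reassemble(wire) == responses
```

The frames of the three style sheets arrive mixed together, yet each one is restored intact on the client.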

All requests share the same connection, so they also share bandwidth. Depending on the number of resources, this might mean that individual resources take longer to reach the client on low-bandwidth connections.

This also means that resource prioritization is not as easy as it was with HTTP/1.1, where the order of resources in the document had an impact on when they began to download. With HTTP/2, everything happens at the same time! The HTTP/2 spec contains information on stream prioritization, but at the time of this writing, putting control over prioritization in developers' hands is still in the distant future.
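For reference, the prioritization scheme in the original HTTP/2 spec (RFC 7540, section 5.3) gives each stream a weight from 1 to 256, with a default of 16. Sibling streams that depend on the same parent should receive resources in proportion to their weights. RFC 9113 later deprecated this signaling scheme. The sketch below shows only the proportional split for a flat set of siblings. The 1,000 kbit/s link and the stream names are made up, dependency trees are ignored, and it is not how any particular browser or server schedules frames. With equal weights, it also illustrates the shared bandwidth described above.

```python
def allocate_bandwidth(weights: dict[str, int], link_kbps: float) -> dict[str, float]:
    """Split link capacity among sibling streams in proportion to their
    HTTP/2 weights (1-256). Dependency trees are deliberately ignored."""
    for name, w in weights.items():
        if not 1 <= w <= 256:
            raise ValueError(f"weight for {name!r} must be in 1..256, got {w}")
    total = sum(weights.values())
    return {name: link_kbps * w / total for name, w in weights.items()}

# Default weights (16): every style sheet gets an equal slice of the link.
print(allocate_bandwidth(
    {"base.css": 16, "article.css": 16, "footer.css": 16, "sidebar.css": 16}, 1000))

# Unequal weights: the critical style sheet gets three times the others' share.
print(allocate_bandwidth({"base.css": 48, "footer.css": 16, "sidebar.css": 16}, 1000))
```

In the first call each stream gets 250 kbit/s. In the second, base.css gets 600 kbit/s and the other two get 200 kbit/s each.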

The best request is no request: cherry-picking

So what can we do to overcome the lack of waterfall-style resource prioritization? And how do we avoid wasting bandwidth? Think back to the first rule of performance optimization: the best request is no request. Let's reinterpret the rule.

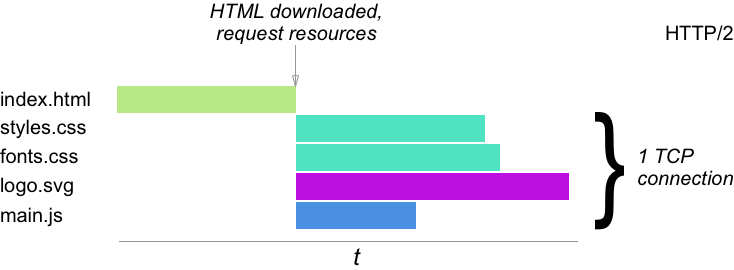

For example, consider a typical webpage (in this case, from Dynatrace). The screenshot below shows a piece of online documentation consisting of different components: main navigation, a footer, breadcrumbs, a sidebar, and the main article.

On other pages of the same site, we have things like a masthead, social media outlets, galleries, or other components. Each component is defined by its own markup and style sheet.

In HTTP/1.1 environments, we would typically combine all component style sheets into one CSS file. The best request is no request: one TCP connection transfers all the CSS necessary, even for pages the user hasn't seen yet. This can result in a huge CSS file.
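The HTTP/1.1 approach amounts to a build step that concatenates every component style sheet into a single bundle, whether or not a given page uses it. Below is a minimal sketch. It assumes component CSS files sit in one directory and are joined in alphabetical order; both are illustrative choices, and since the cascade can depend on source order, real projects may need an explicit order. The function names are hypothetical, and real build tools also minify and generate source maps.

```python
import tempfile
from pathlib import Path

def concatenate(sources: dict[str, str]) -> str:
    """Join component style sheets into one bundle, each prefixed with a
    comment naming its source file. Output follows the dict's order."""
    return "".join(f"/* {name} */\n{css}\n" for name, css in sources.items())

def build_bundle(component_dir: Path, bundle_path: Path) -> int:
    """Read every *.css file in component_dir (alphabetical order is an
    assumption), write the bundle, and return its size in bytes."""
    sources = {p.name: p.read_text(encoding="utf-8")
               for p in sorted(component_dir.glob("*.css"))}
    bundle = concatenate(sources)
    bundle_path.write_text(bundle, encoding="utf-8")
    return len(bundle.encode("utf-8"))

# Demo with throwaway component files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    components = Path(tmp, "components")
    components.mkdir()
    (components / "navbar.css").write_text("nav{display:flex}", encoding="utf-8")
    (components / "footer.css").write_text("footer{padding:1em}", encoding="utf-8")
    size = build_bundle(components, Path(tmp, "main.css"))
    print(f"main.css: {size} bytes")
```

Every page then loads the same main.css, including rules for components it never renders.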

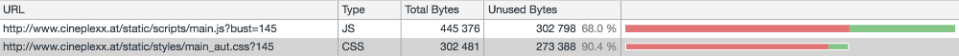

The problem is compounded when a site uses a library like Bootstrap, which has reached the 300 kB mark, and adds site-specific CSS on top of it. In some cases, the CSS actually required by a given page was less than 10% of the amount loaded:

There are even tools like UnCSS that aim to get rid of unused styles.
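A naive sketch illustrates the idea behind such tools. It collects the class names a page's markup uses and reports class selectors in the style sheet that never match. This is a deliberate simplification that only understands single-class rules such as `.navbar { ... }`. It is not how UnCSS works; UnCSS evaluates selectors against the loaded document. The sample markup and styles are made up.

```python
import re
from html.parser import HTMLParser

class ClassCollector(HTMLParser):
    """Collect every class name used in the markup."""
    def __init__(self) -> None:
        super().__init__()
        self.classes = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.classes.update(value.split())

# Only matches rules whose selector is exactly one class, e.g. ".navbar {".
SINGLE_CLASS_RULE = re.compile(r"^\s*\.([A-Za-z_-][\w-]*)\s*\{", re.MULTILINE)

def unused_classes(html: str, css: str) -> list:
    """Return single-class selectors from css that no element in html uses."""
    collector = ClassCollector()
    collector.feed(html)
    declared = SINGLE_CLASS_RULE.findall(css)
    return [c for c in declared if c not in collector.classes]

page = ('<nav class="navbar"><a class="navbar-link">Docs</a></nav>'
        '<article class="article"></article>')
styles = """
.navbar { display: flex; }
.navbar-link { color: teal; }
.article { max-width: 40em; }
.gallery { display: grid; }
.masthead { height: 20em; }
"""
print(unused_classes(page, styles))  # → ['gallery', 'masthead']
```

On this documentation-style page, the gallery and masthead rules are dead weight, just as other components' CSS would be in a site-wide bundle.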

The Dynatrace documentation example shown in figure 3 is built with the company's own style library. Unlike Bootstrap, which is offered as a general-purpose solution, this library is tailored to the site's specific needs. All components in the company style library combined add up to 80 kB of CSS. The CSS actually used on the page is divided among eight of those components, totaling 8.1 kB. So even though the library is tailored to the specific needs of the website, the page still uses only around 10% of the CSS it downloads.
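The roughly 10% figure follows directly from the sizes quoted above:

```latex
\frac{8.1\,\text{kB}}{80\,\text{kB}} \approx 0.101 \approx 10\,\%,
\qquad 80\,\text{kB} - 8.1\,\text{kB} = 71.9\,\text{kB downloaded but unused}
```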

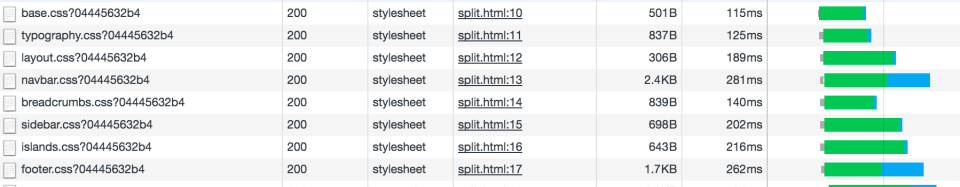

HTTP/2 allows us to be much pickier about the files we transmit. A request is not as costly as it is in HTTP/1.1, so we can safely use more link elements, pointing directly to the components used on that particular page:

<hyperlink rel="stylesheet" href="/css/base.css">

<hyperlink rel="stylesheet" href="/css/typography.css">

<hyperlink rel="stylesheet" href="/css/structure.css">

<hyperlink rel="stylesheet" href="/css/navbar.css">

<hyperlink rel="stylesheet" href="/css/article.css">

<hyperlink rel="stylesheet" href="/css/footer.css">

<hyperlink rel="stylesheet" href="/css/sidebar.css">

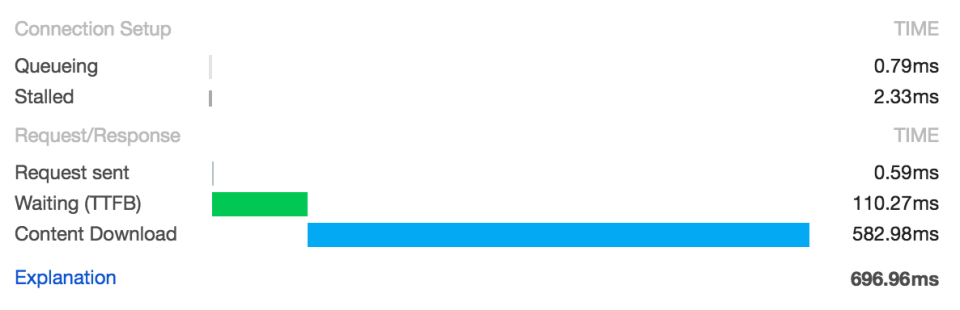

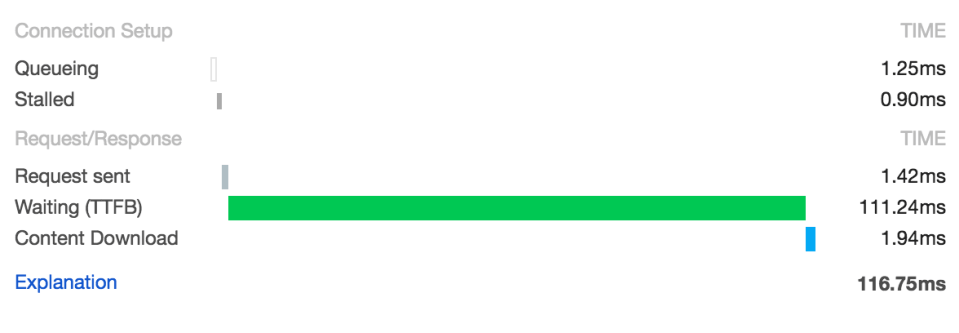

<hyperlink rel="stylesheet" href="/css/breadcrumbs.css">This, after all, is true for each sprite map or JavaScript bundle as properly. By simply transferring what you really want, the quantity of knowledge transferred to your web site may be decreased vastly! Evaluate the obtain occasions for bundle and single information proven with Chrome timings beneath:

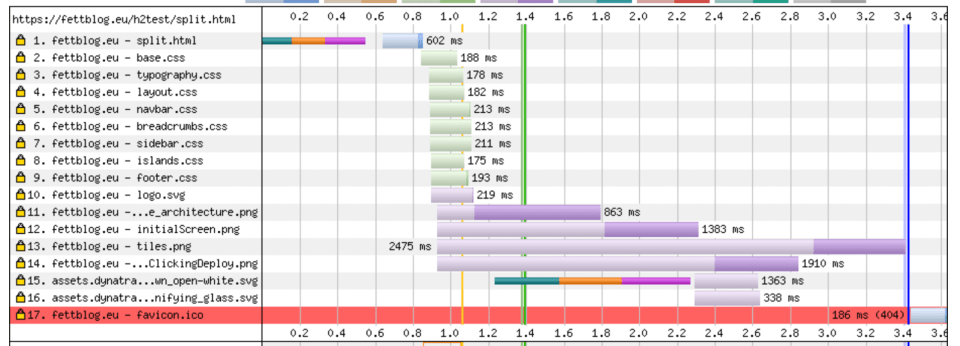

The first image shows that, including the time the browser needs to establish the initial connection, the bundle takes about 700 ms to download on regular 3G connections. The second image shows timing values for one CSS file out of the eight that make up the page. The beginning of the response (TTFB) takes just as long, but since the file is much smaller (less than 1 kB), the content downloads almost immediately.

This might not seem impressive when looking at just one resource. But as shown below, all eight style sheets download in parallel, so we still save a lot of transfer time compared to the bundle approach.

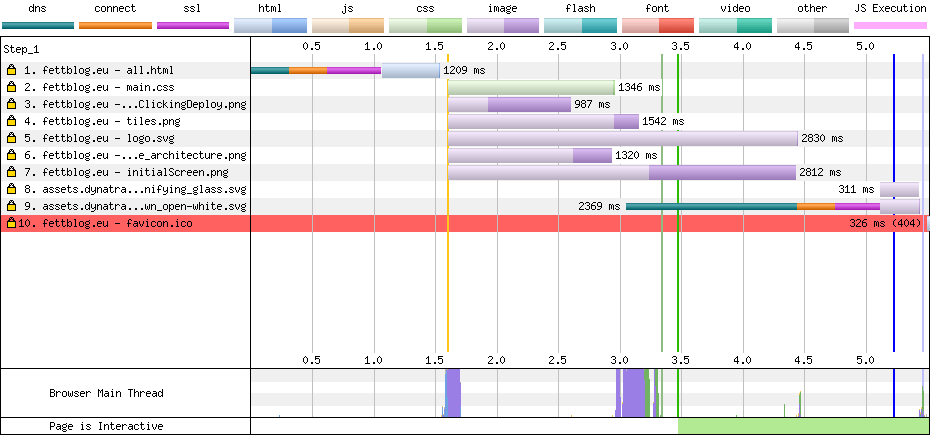

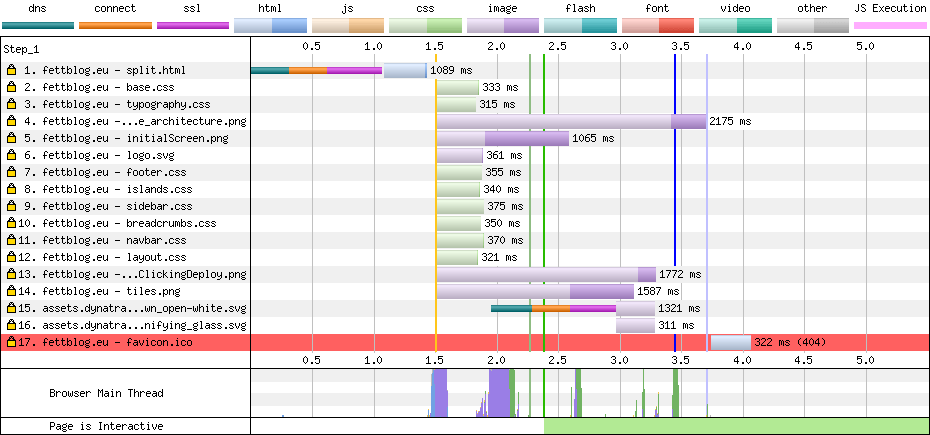

When we run the same page through webpagetest.org on regular 3G, we see a similar pattern. The full bundle (main.css) starts to download just after 1.5 s (yellow line) and takes 1.3 s to download; the time to first meaningful paint is around 3.5 seconds (green line):

When we split up the CSS bundle, each style sheet starts to download at 1.5 s (yellow line) and takes 315–375 ms to finish. As a result, we can reduce the time to first meaningful paint by roughly a second (green line):

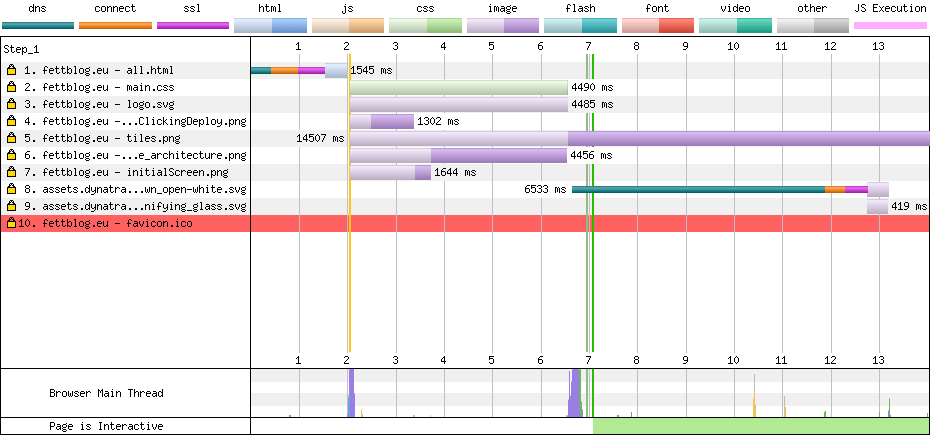

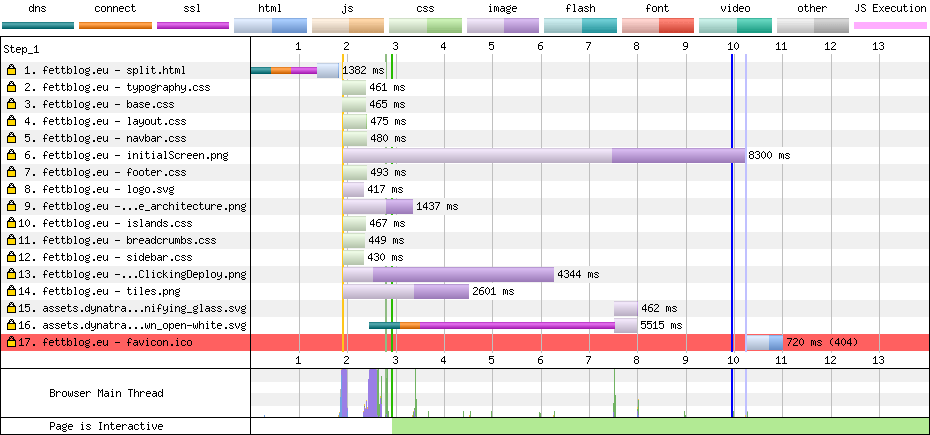

According to our measurements, the difference between bundled and split files has more impact on slow 3G than on regular 3G. On slow 3G, the bundle needs a total of 4.5 s to download, resulting in a time to first meaningful paint of around 7 s:

The same page with split files on slow 3G connections via webpagetest.org results in meaningful paint (green line) occurring 4 s earlier:

The interesting thing is that what was considered a performance anti-pattern in HTTP/1.1 (using lots of references to resources) becomes a best practice in the HTTP/2 era. Plus, the rule stays the same! Only its meaning changes slightly.

The best request is no request: drop files and code your users don't need!
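This rule can be automated. If each page type declares which components it renders, a template helper can emit one link element per component and nothing else. Below is a minimal sketch. The `PAGE_COMPONENTS` mapping and its page types are hypothetical, and the `/css/<component>.css` naming follows the example earlier in this article. In a real project, the mapping would come from your templates or build tool.

```python
from html import escape

# Hypothetical mapping from page type to the components it renders.
PAGE_COMPONENTS = {
    "docs-article": ["base", "typography", "structure", "navbar",
                     "article", "footer", "sidebar", "breadcrumbs"],
    "landing": ["base", "typography", "masthead", "gallery", "footer"],
}

def stylesheet_links(page_type: str, prefix: str = "/css/") -> str:
    """Emit one <link> element per component the page actually uses."""
    return "\n".join(
        f'<link rel="stylesheet" href="{escape(f"{prefix}{name}.css")}">'
        for name in PAGE_COMPONENTS[page_type]
    )

print(stylesheet_links("landing"))
```

A documentation page never requests gallery.css, and a landing page never requests sidebar.css.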

It should be noted that the success of this approach is strongly linked to the number of resources transferred. The example above used 10% of the original style sheet library, which is an enormous reduction in file size. Downloading the whole UI library in split-up files might give different results. For example, Khan Academy found that splitting up their JavaScript bundles made the overall application size (and thus the transfer time) drastically worse. This was mainly due to two reasons: a huge number of JavaScript files (close to 100), and the often underestimated power of Gzip.

Gzip (and Brotli) achieves higher compression ratios when there is repetition in the data being compressed. This means that a Gzipped bundle typically has a much smaller footprint than the same content Gzipped as single files. So if you are going to download a whole set of files anyway, bundled assets might compress better than single files downloaded in parallel. Test accordingly.
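The Gzip effect is easy to reproduce. The sketch below uses Python's standard `gzip` module on synthetic, deliberately repetitive CSS; the component names and rules are made up. It compares the compressed size of one concatenated bundle with the sum of the compressed sizes of the individual files. Real-world numbers depend heavily on your actual files and on Brotli versus Gzip, so treat this as a demonstration of the mechanism, not a benchmark.

```python
import gzip

def make_component(name: str) -> str:
    """Synthetic component style sheet; the shared declarations mimic the
    repetition found across real component CSS."""
    return (
        f".{name} {{ display: flex; margin: 0 auto; padding: 1rem; }}\n"
        f".{name}__title {{ font-family: 'Helvetica Neue', Arial, sans-serif; font-size: 1.25rem; }}\n"
        f".{name}__body {{ color: #333333; line-height: 1.5; }}\n"
    )

components = {f"component-{i}": make_component(f"component-{i}") for i in range(20)}

# Each file compressed on its own: no shared history between files,
# plus a Gzip header and trailer for every file.
separate = sum(len(gzip.compress(css.encode())) for css in components.values())

# One bundle: repeated declarations can refer back to earlier occurrences.
bundle = len(gzip.compress("".join(components.values()).encode()))

print(f"20 separate files: {separate} bytes gzipped")
print(f"one bundle:        {bundle} bytes gzipped")
```

On this input the bundle comes out much smaller. It only wins, however, if the page actually needs most of those files.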

Also, be aware of your user base. While HTTP/2 has been widely adopted, some of your users might be limited to HTTP/1.1 connections. For them, split resources will hurt performance, because HTTP/1.1 has to fetch them over a limited number of connections.

The best request is no request: caching and versioning

So far, our example has shown how to optimize the first visit to a page. The bundle is split up into separate files, and the client receives only what it needs to display the page. This gives us the chance to look into something people tend to neglect when optimizing for performance: subsequent visits.

On subsequent visits, we want to avoid re-transferring assets unnecessarily. HTTP headers like Cache-Control (and their implementation in servers like Apache and NGINX) allow us to store files on the user's disk for a specified amount of time. Some CDN servers default to a few minutes, others to a few hours or even days. The idea is that during a session, users shouldn't have to download what they already have (unless they've cleared their cache in the meantime). For example, the following Cache-Control header directive makes sure the file is stored in any available cache for 600 seconds.

Cache-Control: public, max-age=600

We can use Cache-Control to be much stricter. In our first optimization, we decided to cherry-pick resources and be picky about what we transfer to the client, so let's store these resources on the device for a long period of time:

Cache-Control: public, max-age=31536000

The number above is one year in seconds. The benefit of a high Cache-Control max-age value is that the client stores the asset for a long period of time. The screenshot below shows a waterfall chart of the first visit. Every asset referenced by the HTML file is requested:

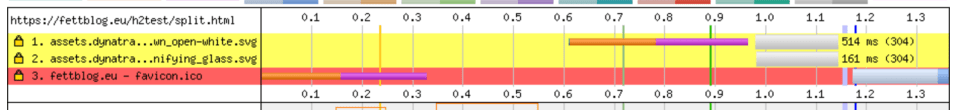
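Below is a simplified sketch of how a cache decides whether a stored copy can be reused without a request. A response counts as fresh while its age is below `max-age`. The sketch ignores `no-cache`, `s-maxage`, `Expires`, heuristic freshness, and revalidation, all of which real caches handle. The function names are illustrative.

```python
from typing import Dict, Union

def parse_cache_control(header: str) -> Dict[str, Union[str, bool]]:
    """Split a Cache-Control header into directives.
    Valueless directives (e.g. 'public') map to True."""
    directives: Dict[str, Union[str, bool]] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') if sep else True
    return directives

def is_fresh(header: str, age_seconds: int) -> bool:
    """True if a stored response may be reused without contacting the server."""
    directives = parse_cache_control(header)
    if "no-store" in directives:
        return False
    max_age = directives.get("max-age")
    if not isinstance(max_age, str) or not max_age.isdigit():
        return False  # no usable max-age: this sketch simply treats it as stale
    return age_seconds < int(max_age)

ONE_YEAR = 365 * 24 * 60 * 60  # 31536000 seconds, the value used above

print(is_fresh("public, max-age=600", age_seconds=300))                  # → True
print(is_fresh("public, max-age=600", age_seconds=900))                  # → False
print(is_fresh(f"public, max-age={ONE_YEAR}", age_seconds=86_400 * 30))  # → True
```

With a ten-minute lifetime, a copy fetched fifteen minutes ago has to be requested again. With a one-year lifetime, a copy fetched a month ago is still served straight from the disk.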

With properly set Cache-Control headers, a subsequent visit results in fewer requests. The screenshot below shows that none of the assets on our test domain trigger a request. Assets from another domain with improperly set Cache-Control headers still trigger a request, as do resources that weren't found:

When it comes to invalidating a cached asset (which, incidentally, is one of the two hardest problems in computer science), we simply use a new asset instead. Let's see how that would work with our example. Caches are keyed on the resource's URL, so a new file name triggers a new download. We have already split up our code base into reasonable chunks. A version indicator makes sure that each file name stays unique:

<link rel="stylesheet" href="/css/header.v1.css">
<link rel="stylesheet" href="/css/article.v1.css">

After a change to our article styles, we would modify the version number:

<link rel="stylesheet" href="/css/header.v1.css">
<link rel="stylesheet" href="/css/article.v2.css">

Instead of keeping track of each file's version by hand, you can use automation tools to set a revision hash based on the file's content.
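A revision hash can be sketched with Python's `hashlib`. The code derives a short fingerprint from the file's bytes and puts it into the file name, so the name changes exactly when the content does. The eight-character truncation and the `name.<hash>.css` pattern are assumptions for illustration; build tools offer their own conventions.

```python
import hashlib
from pathlib import PurePosixPath

def hashed_name(path: str, content: bytes, length: int = 8) -> str:
    """Return e.g. 'css/article.<hash>.css', where <hash> is the first
    `length` hex characters of the SHA-256 of the content."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))

v1 = hashed_name("css/article.css", b"article { max-width: 40em; }")
v2 = hashed_name("css/article.css", b"article { max-width: 42em; }")
print(v1)
print(v2)  # a different name, so browsers fetch the changed file anew
```

Unchanged files keep their names, and their cached copies, across deployments.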

It's OK to store your assets on the client for a long period of time. Your HTML, however, should be more short-lived in most cases. Typically, the HTML file contains the information about which resources to download. If you want your resources to change (such as loading article.v2.css instead of article.v1.css, as we just saw), you'll need to update the references to them in your HTML. Popular CDN servers cache HTML for no longer than six minutes, but you can decide what's better suited for your application.

And again, the best request is no request: store files on the client as long as possible, and don't request them over the wire ever again. Recent Firefox and Edge versions even support an immutable directive for Cache-Control that targets this pattern specifically.
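Putting the two policies together, a server or build step might pick the Cache-Control value based on the kind of file. Below is a minimal sketch. It assumes versioned assets can be recognized by a `.v<number>.` or eight-hex-character fingerprint in their names (a hypothetical naming scheme). It uses 360 seconds (six minutes) for HTML, matching the CDN example above, and a 600-second fallback for unversioned files. Adapt all three to your own setup.

```python
import re

ONE_YEAR = 31_536_000  # seconds
HTML_MAX_AGE = 360     # six minutes; choose what suits your application

# Hypothetical naming scheme: article.v2.css or article.1a2b3c4d.css
VERSIONED_ASSET = re.compile(r"\.(v\d+|[0-9a-f]{8})\.(css|js|png|svg|woff2)$")

def cache_control_for(path: str) -> str:
    """Pick a Cache-Control value from the request path."""
    if path.endswith(".html") or path.endswith("/"):
        # HTML decides which asset versions load, so keep it short-lived.
        return f"public, max-age={HTML_MAX_AGE}"
    if VERSIONED_ASSET.search(path):
        # The name changes whenever the content does, so the copy can live
        # for a year; 'immutable' tells supporting browsers not to revalidate.
        return f"public, max-age={ONE_YEAR}, immutable"
    # Unversioned files: a conservative short lifetime (assumption).
    return "public, max-age=600"

for p in ("/docs/index.html", "/css/article.v2.css",
          "/css/article.1a2b3c4d.css", "/img/logo.png"):
    print(f"{p:28} {cache_control_for(p)}")
```

Shipping a new article.v3.css then only requires the short-lived HTML to expire. The old, year-long copies are simply never referenced again.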

HTTP/2 was designed from the ground up to address the inefficiencies of HTTP/1. Triggering a large number of requests in an HTTP/2 environment is no longer inherently bad for performance; transferring unnecessary data is.

To reach the full potential of HTTP/2, we have to look at each case individually. An optimization that works well for one website can have a negative effect on another. Despite all the benefits that come with HTTP/2, the golden rule of performance optimization still applies: the best request is no request. Only this time, we look at the actual amount of data transferred.

Only transfer what your users actually need. Nothing more, nothing less.